Theodore Lowe, Ap #867-859 Sit Rd, Azusa New York

AI Software Development

AI Software & Application Development Services for Efficiency & Scale

Engineering high-impact AI systems for better unit economics.


AI Engineering Excellence for Growth Focused Teams

A Better Way to Approach AI Software & Application Development

Most AI initiatives struggle not because of model choice but because of the immature system that surrounds the model. Data pipelines, integration paths, governance layers, security boundaries, latency budgets, cost dynamics and user workflows all decide whether AI becomes an asset or an expensive experiment. That is the part most AI engineering teams underestimate, and the gap we were built to close.

At Clixlogix, we treat AI as more than a novelty. We design the architecture before the model, align AI with existing systems, build for observability, enforce governance, and optimize economics from day one. The result is AI software that behaves predictably, scales responsibly, and delivers value you can rely on.

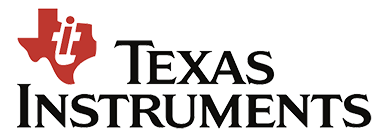

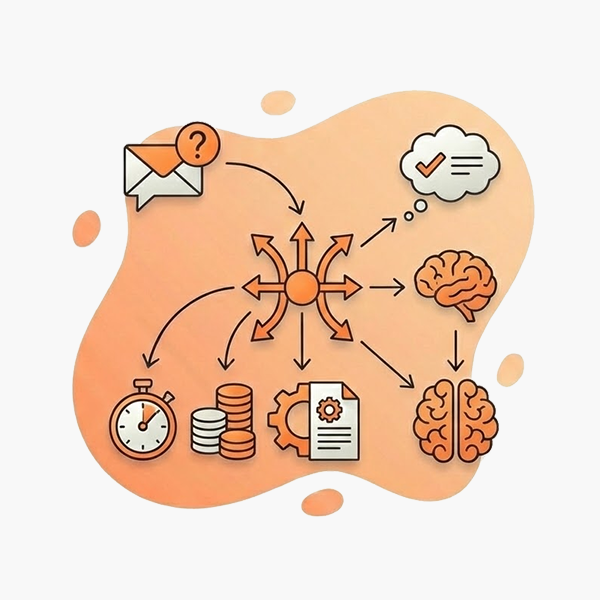

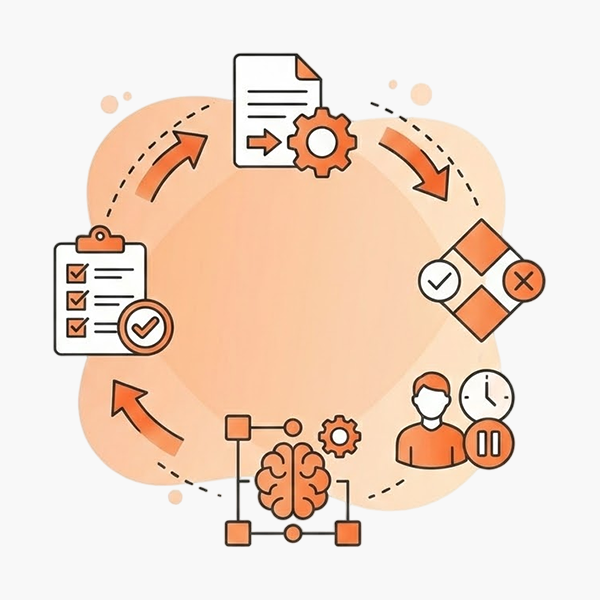

The Four Layer AI Software & Application Development Framework

AI engineering is more than a linear checklist. It is a sequence of progressive validations that reduce risk, sharpen behaviour, and strengthen the system as it grows. Our process is built on one belief: every AI system must prove its value, safety, and economics at every stage. This creates a delivery discipline that compresses uncertainty early and compounds reliability over time.

Where AI Delivery Falters & How We Hold It Together

| SERVICE PILLAR | WHAT GENERALLY GOES WRONG | HOW WE SUPPORT | THE ECONOMICS |
|---|---|---|---|
| 1. Problem Lineage | Teams target symptoms instead of real work. | We map intent, workflows and friction precisely. | Prevents mis-scoping and avoids building what won’t be used. |
| 2. Behaviour Definition | Behaviour is assumed, so AI responds poorly. | We define inputs, boundaries and responses early. | Reduces rework and cuts training cycles and tuning effort. |
| 3. Controlled Customization Logic | Roles, tools and data surfaces shift midway. | We stabilise system boundaries with all teams. | Limits integration churn and protects delivery budgets. |
| 4. Reliable Integrations & Data Flow Stability | Advanced reasoning is attempted too soon. | We grow capability in controlled layers. | Ensures effort maps to real value and avoids wasted builds. |
| 5. Data Accuracy & Migration Confidence | AI breaks under real volume or edge cases. | We reinforce continuity, monitoring and stability. | Reduces outages and support load and lowers long-term cost. |

Core AI Software & Application Development Offerings

Our AI-powered app and software development services cover the full delivery lifecycle, from early consulting to long-term system evolution. You can engage us end to end or plug us in to strengthen a specific stage such as AI Integration, AI Workflow Automation, or AI Agent Development.

AI Application Development

We design and build AI applications that reflect your domain logic, operational structure and product goals, aiming for stable behaviour, reliable performance and features that integrate cleanly into your existing ecosystem.

AI Integration Services

Our team connects AI models to your CRM, ERP, commerce, and internal tools with clean data pathways and controlled behavior. This ensures AI becomes a reliable part of daily operations.

AI Workflow Automation

We automate repetitive decision flows and operational tasks by combining AI reasoning with deterministic rules, increasing output per unit of effort while preserving the accuracy and constraints your business needs.

AI Agent Development

We develop task-specific agents with defined behaviour, boundaries, and outcome expectations. They support customer service, operations, and internal teams with predictable execution.

AI Chatbot Development

We create chatbots trained on your knowledge base and workflows, with guardrails for reliable responses. They reduce support load, improve resolution speed and keep conversations aligned with your brand and policies.

AI & Data Analytics

We implement AI models and analytics layers that turn operational data into clear insights on forecasts, anomalies, cost patterns and performance signals, helping teams act faster and with more precision.

Retrieval Augmented AI (RAG)

We construct retrieval layers that let AI use your business data accurately, improving reliability in knowledge, support, and operational scenarios.

Model Fine Tuning

We select and fine-tune AI models based on performance, stability, and cost profile, ensuring fit-for-purpose intelligence and a controlled operating expense.

Multimodal AI Features

We build AI features that interpret text, images, PDFs or audio to automate complex review tasks, enrich product experiences and reduce manual effort across departments.

AI Governance & Monitoring

We implement monitoring, cost controls, access management and behavioural safeguards so your AI systems remain stable, compliant and economically efficient as usage scales.

Artificial Intelligence Architecture Audit

We assess your workflows, data quality, systems and operational constraints to define where AI can create measurable value. This ensures you invest in the right use cases, not speculative experiments.

AI Project Rescue & Stabilization

We step into troubled AI initiatives that are over budget, misaligned or failing in production. Our team diagnoses the root issues and restores the system to predictable, stable behaviour.

AI Quality Engineering & Testing

We run structured testing for model behaviour, data handling, workflow correctness and edge case responses. This ensures your AI system performs reliably under real world conditions and operational load.

AI Architecture & Design Advisory

We define the architectural structure, retrieval layers, model orchestration, data pathways and governance to ensure your AI solution remains scalable and maintainable as usage grows.

AI Cost & Performance Audit

We analyse your model choices, workload patterns and infrastructure to reduce operating cost while improving latency and accuracy. A clear path to better economics without sacrificing capability.

AI Security & Compliance Review

We evaluate your AI pipelines for access risks, data handling gaps and policy violations, then implement controls that meet internal and regulatory requirements.

Why Companies Choose Clixlogix for AI Software & Application Development

- 7+ years delivering AI, automation and intelligent systems.

- AI solutions for retail, logistics, manufacturing, finance, healthcare, education and SaaS.

- Expertise in OpenAI, Google Gemini, Claude, LangChain, Llama, vector databases (Pinecone, Weaviate, Chroma).

- We integrate AI into CRM, ERP, WMS, HRMS, commerce platforms and custom backends.

- Model lifecycle management, prompt governance, behaviour workflows, risk logs and observability dashboards keep delivery steady and predictable.

- Experience with GDPR, SOC 2, ISO 27001, HIPAA-aligned workflows, model access audits, data residency constraints and secure migration.

- RAG systems, agentic architectures, fine tuned models, multimodal AI, high volume inference optimisation, intelligent automation and context driven orchestration across enterprise workloads.

We always bring our A game when the stakes are high


See how behaviour definition, model governance and structured workflows drive consistent success across AI projects.

Learn how our compliance practices and security controls safeguard sensitive information across AI pipelines.

Advanced Capabilities of AI Software & Application Development

We support AI initiatives that require deeper architectural thinking, stronger governance and higher operational reliability. These capabilities allow AI systems to perform consistently under scale, regulatory pressure and variable load.

Multi Agent Orchestration

Design and coordination of agents that collaborate, escalate or hand off tasks within controlled boundaries for complex workflows.

Enterprise RAG Pipelines

Structured retrieval layers with vector indexing, reranking, freshness rules and auditability to ensure grounded, verifiable responses.
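As a rough illustration of that retrieval flow, the Python sketch below applies a freshness rule before scoring, ranks chunks by cosine similarity, and then lets an optional reranker reorder the shortlist. It is a minimal in-memory sketch under stated assumptions: the `Chunk` type, the `retrieve` function, the 90-day default and the `rerank` callback are hypothetical names chosen for illustration. A production pipeline would use a vector database and a dedicated reranking model instead of the linear scan shown here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Callable, Optional
import math


@dataclass
class Chunk:
    doc_id: str              # source document id, kept so answers can cite their origin
    text: str
    embedding: list[float]
    updated_at: datetime     # used by the freshness rule


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(
    query_embedding: list[float],
    chunks: list[Chunk],
    k: int = 3,
    max_age_days: int = 90,                       # hypothetical freshness window
    now: Optional[datetime] = None,
    rerank: Optional[Callable[[Chunk], float]] = None,
) -> list[Chunk]:
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    # Freshness rule: stale chunks are excluded before any scoring happens.
    fresh = [c for c in chunks if c.updated_at >= cutoff]
    # First stage: vector similarity, keeping a wider shortlist than we return.
    shortlist = sorted(fresh, key=lambda c: cosine(query_embedding, c.embedding), reverse=True)[: k * 3]
    # Second stage: an optional reranker (for example a cross-encoder score) reorders the shortlist.
    if rerank is not None:
        shortlist = sorted(shortlist, key=rerank, reverse=True)
    return shortlist[:k]
```

Returning whole `Chunk` objects rather than bare text keeps `doc_id` available, which is what lets a response be checked against its sources.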

Inference Optimization

Batching, caching, routing and model selection strategies to reduce latency and compute cost under heavy production load.
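Caching and routing can be sketched in a few lines. This Python sketch is illustrative, not a production design: `InferenceRouter`, the 500-character threshold and the two model callables are hypothetical stand-ins for real provider clients. The cache is an unbounded in-memory dict keyed on whitespace-normalised prompts, and batching is not shown.

```python
import hashlib
from typing import Callable

ModelFn = Callable[[str], str]  # hypothetical: any function that maps a prompt to a completion


class InferenceRouter:
    def __init__(self, small_model: ModelFn, large_model: ModelFn, max_small_chars: int = 500) -> None:
        self.small_model = small_model
        self.large_model = large_model
        self.max_small_chars = max_small_chars
        self.cache: dict[str, str] = {}
        self.cache_hits = 0

    @staticmethod
    def _cache_key(prompt: str) -> str:
        # Collapse whitespace so prompts that differ only in spacing share one cache entry.
        normalised = " ".join(prompt.split())
        return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

    def complete(self, prompt: str) -> str:
        key = self._cache_key(prompt)
        if key in self.cache:
            self.cache_hits += 1
            return self.cache[key]
        # Routing rule: short prompts go to the cheaper model, long ones to the larger model.
        model = self.small_model if len(prompt) <= self.max_small_chars else self.large_model
        answer = model(prompt)
        self.cache[key] = answer
        return answer
```

Exact-match caching only pays off for repeated prompts. Semantic caching and request batching are common next steps, but they are outside this sketch.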

AI Observability

Continuous tracking of model behaviour, response consistency, data drift, cost anomalies and operational health through structured dashboards.
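One common way to quantify data drift is the population stability index (PSI). It compares how a feature or model score was distributed at launch with how it is distributed now. The Python sketch below is one possible implementation under simple assumptions: equal-width bins derived from the baseline sample, a small floor to avoid taking the log of zero, and the widely quoted but informal thresholds of 0.1 and 0.25. The function names and defaults are our own choices, not part of any specific monitoring product.

```python
import math


def population_stability_index(baseline: list[float], current: list[float], bins: int = 10, floor: float = 1e-6) -> float:
    """PSI = sum over bins of (current% - baseline%) * ln(current% / baseline%)."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), floor) for c in counts]

    base, curr = proportions(baseline), proportions(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))


def drift_level(psi: float) -> str:
    # Informal thresholds commonly used in practice; tune them per feature.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate"
    return "significant"
```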

ModelOps

Access controls, activity logs, encrypted pathways, model versioning and policy enforcement across sensitive AI workloads.

Context Routing & Dynamic Prompt Architectures

Systems that assemble context dynamically from multiple data surfaces to ensure accurate, domain appropriate reasoning.

Multi Tenant AI System Design

Architectures that isolate customer data, configuration, prompt paths and memory boundaries for SaaS and platform environments.
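A simple way to picture tenant isolation is a store where every read and write requires a tenant identifier. With that design, no code path can scan another tenant's documents or prompt configuration. The Python sketch below is hypothetical: `TenantScopedStore` and its methods are illustrative names, and keyword matching stands in for vector search to keep it self-contained.

```python
class TenantScopedStore:
    def __init__(self, default_prompt: str = "You are a helpful assistant.") -> None:
        self._docs: dict[str, list[str]] = {}
        self._prompts: dict[str, str] = {}
        self._default_prompt = default_prompt

    def add_document(self, tenant_id: str, text: str) -> None:
        # Each tenant gets its own partition; documents are never stored in a shared list.
        self._docs.setdefault(tenant_id, []).append(text)

    def set_system_prompt(self, tenant_id: str, prompt: str) -> None:
        # Prompt paths are configured per tenant, too.
        self._prompts[tenant_id] = prompt

    def search(self, tenant_id: str, keyword: str) -> list[str]:
        # Only the calling tenant's partition is scanned.
        return [d for d in self._docs.get(tenant_id, []) if keyword.lower() in d.lower()]

    def build_context(self, tenant_id: str, keyword: str) -> str:
        # Combine the tenant's own prompt with the tenant's own retrieved documents.
        prompt = self._prompts.get(tenant_id, self._default_prompt)
        docs = "\n".join(self.search(tenant_id, keyword))
        return f"{prompt}\n\nContext:\n{docs}"
```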

Streaming & Event Driven AI Processing

Real time AI pipelines that react to sensor data, IoT events, transactional streams or operational triggers with low latency response paths.

Privacy Preserving AI

Techniques such as minimised data exposure, controlled embeddings, redaction before indexing, and compliance-ready lineage tracking.
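To make redaction before indexing concrete, the Python sketch below masks e-mail addresses and phone-number-like strings before a document is appended to an index. The two regular expressions are deliberately simple assumptions. They miss many PII formats and over-match some harmless strings (ISO dates, for example), so a production system would typically use a dedicated PII detection service. `redact` and `index_document` are hypothetical names.

```python
import re

# Illustrative patterns only: they miss many PII formats and over-match some
# harmless strings (ISO dates, for example). Order matters: e-mails first.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def index_document(doc: str, index: list[str]) -> None:
    # Redaction happens before embedding or storage, so raw PII never reaches the index.
    index.append(redact(doc))
```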

Model Evaluation, Benchmarking & Hardening

Structured performance testing against accuracy, safety, reasoning depth and cost criteria before production rollout.

Teams We Support With AI Software & Application Development Services

AI creates the most value when the system reflects how a team actually operates. We align delivery with each team’s priorities, so they get clarity, control, and predictability without compromising pace or stability.

Founders & Business Leaders

You are making long-term bets on AI as a driver of growth or efficiency. You need clarity on what AI can realistically deliver, how it affects cost structure, and when returns become visible. We validate opportunities against business model economics before development begins.

Typical focus areas:

- ROI Validation

- Cost Structure Impact

- Governance Frameworks

- Investor Readiness

- Scaling Economics

- Vendor Dependency

Product & Engineering Teams

You are responsible for systems that work in production. You need AI architecture that integrates cleanly with existing infrastructure, remains maintainable as requirements shift, and performs within latency and cost constraints.

Typical focus areas:

- Model Selection

- Architecture Design

- Data Pipelines

- Testing Frameworks

- API Integration

- Performance Tuning

Ops, IT & Governance Teams

You inherit AI systems after launch. You need confidence that what enters production remains stable, auditable, and compliant. We build with your requirements from day one, embedding monitoring and rollback capabilities into the architecture.

Typical focus areas:

- Observability

- Audit Trails

- Drift Detection

- Retraining Schedules

- Access Controls

- Incident Response

Industries We Deliver AI Software and Application Development For

AI delivers measurable value when it reflects the workflows, data structures, and compliance pressures of your industry. We bring domain understanding across sectors where intelligent systems improve decision speed, reduce operational cost, and unlock new capabilities.

Manufacturing & Production

Retail & E Commerce

Transportation & Logistics

AI value depends on domain context. See how we apply intelligent systems across sectors you operate in.

What Goes Into a Production AI System

Production AI software requires more than a model. It requires data infrastructure, integration layers, safety controls, and operational visibility. We design every component to work together, so your system performs under real conditions and remains maintainable as requirements evolve.

Intelligence & Data Layer

This is where your AI system ingests information, reasons through context, and generates responses. Getting these components right determines accuracy, relevance, and consistency across every interaction.

Data Ingestion & Preprocessing

Vector Storage & Retrieval

Model Selection & Orchestration

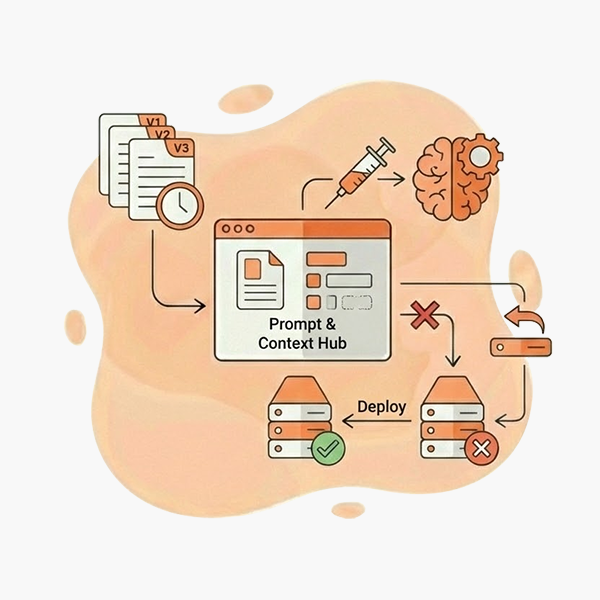

Prompt & Context Management

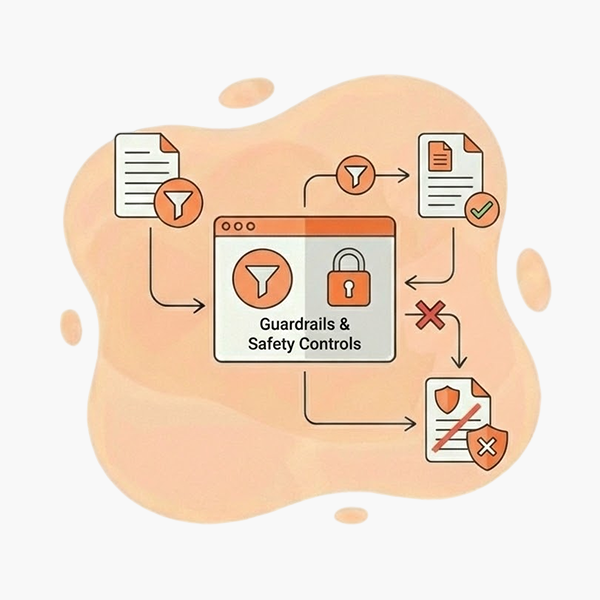

Guardrails & Safety Controls

Input validation and output filtering enforce behavioral boundaries. Responses stay within policy without manual review.
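Input validation and output filtering can be sketched as two checks wrapped around the model call. This Python sketch is illustrative only. The length limit, the single prompt-injection pattern, the blocked output phrase and the fallback message are hypothetical policy values. Simple pattern matching is a first line of defence, not complete protection against prompt injection.

```python
import re
from dataclasses import dataclass


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reason: str = ""


# Hypothetical policy values, for illustration only.
MAX_INPUT_CHARS = 4000
INJECTION_PATTERNS = [re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE)]
BLOCKED_OUTPUT_PHRASES = ["internal use only"]
FALLBACK_REPLY = "Sorry, I can't help with that request."


def check_input(user_text: str) -> GuardrailResult:
    """Validate user input before it is sent to the model."""
    if not user_text.strip():
        return GuardrailResult(False, "", "empty input")
    if len(user_text) > MAX_INPUT_CHARS:
        return GuardrailResult(False, "", "input too long")
    for pattern in INJECTION_PATTERNS:
        if pattern.search(user_text):
            return GuardrailResult(False, "", "possible prompt injection")
    return GuardrailResult(True, user_text)


def check_output(model_text: str) -> GuardrailResult:
    """Filter model output before it reaches the user, substituting a safe fallback."""
    lowered = model_text.lower()
    for phrase in BLOCKED_OUTPUT_PHRASES:
        if phrase in lowered:
            return GuardrailResult(False, FALLBACK_REPLY, f"blocked phrase: {phrase}")
    return GuardrailResult(True, model_text)
```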

Feedback & Evaluation Loops

Monitoring & Drift Detection

Caching & Response Optimization

Reporting & Analytics

System & Operations Layer

Users, workflows, integrations, and compliance live here. These components ensure your team can manage, scale, and trust the system long after launch.

Auth & Role Management

Admin Dashboards

Workflows & Orchestration

APIs & Integrations

Notifications & Alerts

File & Document Handling

Cost Metering & Billing

Audit Logging & Compliance

Multi Tenancy

Search & Filters

Localization & Personalization

Error Handling & Fallbacks

Why Architecture Decisions Compound

Architecture decisions made in the first few weeks shape cost structure, iteration speed, and operational burden for years. A retrieval layer that works at demo scale may collapse under production load. A prompt system without versioning becomes impossible to debug. An integration built without fallback logic fails when third-party APIs change.

We design for what happens after launch:

- Modularity to swap models, add data sources, or extend workflows without rewriting core logic

- Observability so issues surface before users report them

- Cost Metering for request-level margin visibility from day one

- Fallback Logic for graceful degradation when dependencies fail

- Version Control for prompts, models, and configs, so you can roll back safely when needed
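Three of these properties (modularity, fallback logic and request-level cost metering) can be combined in a thin gateway that sits between the application and the model providers. The Python sketch below assumes each provider client exposes a `name`, a `price_per_1k_tokens` and a `complete()` method that returns the text and the token count. That interface, `MeteredGateway` and the flat per-token pricing are hypothetical simplifications, not any provider's actual SDK.

```python
from dataclasses import dataclass, field
from typing import Protocol


class ModelClient(Protocol):
    """Hypothetical provider interface; real SDKs differ and would be wrapped to fit it."""
    name: str
    price_per_1k_tokens: float

    def complete(self, prompt: str) -> tuple[str, int]:
        """Return the generated text and the number of tokens billed."""
        ...


@dataclass
class MeteredGateway:
    providers: list[ModelClient]                      # ordered by preference; the first is the primary
    ledger: list[dict] = field(default_factory=list)

    def complete(self, prompt: str, request_id: str) -> str:
        errors: list[str] = []
        for provider in self.providers:
            try:
                text, tokens = provider.complete(prompt)
            except Exception as exc:
                # Fallback logic: record the failure and try the next provider.
                errors.append(f"{provider.name}: {exc}")
                continue
            # Cost metering: one ledger entry per successful request.
            cost = tokens / 1000 * provider.price_per_1k_tokens
            self.ledger.append({"request_id": request_id, "model": provider.name, "tokens": tokens, "cost": cost})
            return text
        raise RuntimeError("all providers failed: " + "; ".join(errors))
```

Because the application only talks to the gateway, swapping or reordering providers becomes a configuration change. The ledger gives a per-request cost record that can be aggregated by user, query or transaction.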

AI Platforms & Infrastructure We Work With

We build AI-powered systems across leading model providers and infrastructure platforms. Our teams select, integrate, and optimize based on your use case, cost constraints, and long-term scalability. Each implementation reflects a deep understanding of how these technologies behave in production environments.

Foundational Model Providers

The large language models and AI platforms we deploy for production workloads.

OpenAI

Anthropic Claude

Google Gemini & Vertex AI

AWS Bedrock & SageMaker

Azure OpenAI & Azure ML

Meta Llama

LLM Orchestration & RAG

Frameworks and tools for building complex AI workflows, agents, and retrieval systems.

LangChain

LlamaIndex

Semantic Kernel

Haystack

LangGraph

CrewAI

Vector Databases & Embeddings

Storage and retrieval infrastructure for semantic search and RAG systems.

Pinecone

Managed vector database built for production AI workloads. We use Pinecone for semantic search, recommendation systems, and RAG retrieval layers. Serverless architecture scales automatically without capacity planning. Supports hybrid search combining dense vectors with sparse keyword matching. Best suited for teams who want production-grade vector search without managing database operations, and for applications where retrieval latency and uptime are critical.

Weaviate

Qdrant

OpenAI Embeddings

pgvector

ML Frameworks & Model Development

Core libraries for custom model training, fine tuning, and classical ML.

PyTorch

TensorFlow

Hugging Face

scikit-learn

The de facto standard library for classical machine learning in Python. We use scikit-learn for tabular data tasks, including classification, regression, clustering, and dimensionality reduction, where deep learning adds complexity without proportional accuracy gains. Its consistent API across algorithms enables rapid experimentation with minimal code changes, and built-in tools for preprocessing, feature selection, cross-validation, and hyperparameter tuning cover the full model development workflow.

MLOps & Observability

Tools for experiment tracking, model monitoring, and production AI operations.

MLflow

Weights & Biases

LangSmith

Inference & Optimization

Tools for deploying models efficiently at scale with cost control.

vLLM

TensorRT

Modal

Anyscale

ONNX Runtime

Get a structured breakdown of your AI project’s cost based on use case complexity, model selection, integration scope, and deployment approach.

AI Software & Application Development Engagement Models

The right engagement structure depends on where AI sits in your organization today. Teams building their first production system need different support than those scaling existing capabilities or experimenting with new use cases. We’ve worked across all three, and we structure engagements around your current maturity, risk tolerance, and internal capacity rather than forcing a standard model.

| Engagement Model | How It Works |
|---|---|
| Exploration & Proof of Concept | When you need to validate feasibility before committing. We scope a focused experiment, select appropriate models, build a working prototype, and deliver clear findings on technical viability, cost structure, and production path. Typical duration is 4 to 8 weeks. |
| AI Team Augmentation: Dedicated Team | AI engineers integrated into your team on a sustained basis. They work inside your systems, attend your standups, and build context over time. For organizations developing AI capabilities as a core competency. Monthly or quarterly commitments with consistent team composition. |
| AI Team Augmentation: On Demand AI Expertise | Targeted expertise for specific challenges like model evaluation, prompt optimization, fine tuning, inference cost reduction, compliance preparation, or architecture review. Short engagements, defined deliverables, minimal overhead. Useful when your team has momentum but needs specialized depth. |
| Time & Material | Pay only for the actual tracked hours spent. Ideal for discovery heavy projects, evolving requirements, integrations, optimization, and enhancement cycles where flexibility matters. Offers transparency and a controlled pace. |
| Fixed Cost | Full delivery of a production ready AI system. Covers architecture, model selection, data pipeline design, application development, integration, testing, deployment, and monitoring setup. We own the technical outcome, you own the business direction. Structured milestones with defined checkpoints. |

What Shapes Your AI Software & Application Development Project Investment

AI project economics depend on a combination of technical, organizational, and operational factors. We scope engagements through structured discovery that surfaces these variables early, allowing us to provide estimates grounded in delivery realities rather than assumptions. The frameworks below outline how we assess complexity and structure investment expectations.

Factors That Influence Project Scope

| Factor | What We Assess |
|---|---|
| Use Case Complexity | Single turn vs. multistep reasoning, deterministic vs. probabilistic outputs, accuracy requirements |
| Data Readiness | Availability, quality, structure, access constraints, preprocessing needs |
| Model Requirements | Off the shelf APIs, fine tuned models, custom training, multimodel orchestration |
| Integration Depth | Number of systems, authentication patterns, data synchronization, latency constraints |
| Compliance & Security | Data residency, audit requirements, access controls, industry specific regulations |
| Operational Maturity | Monitoring needs, human in the loop requirements, escalation workflows |
| Internal Capacity | Technical team involvement, decision making velocity, change management readiness |

Representative Project Archetypes

| Archetype | Typical Scope | Timeline | Investment Range |
|---|---|---|---|
| Proof of Concept | Single use case, limited integration, feasibility validation | 4 to 8 weeks | $15,000 to $45,000 |
| Production MVP | Core functionality, primary integrations, deployment ready system | 10 to 16 weeks | $35,000 to $100,000 |
| Enterprise Implementation | Multi workflow system, complex integrations, compliance controls, organizational rollout | 4 to 8 months | $80,000 to $250,000+ |

Understand how your requirements translate into timeline and investment. We scope AI projects based on use case complexity, model architecture, integration depth, and operational needs.

AI System Security & Compliance We Follow

AI systems introduce security and compliance considerations that extend beyond traditional application architecture. Data flows through model providers, prompts may contain sensitive context, outputs require validation, and audit requirements demand full traceability. We engineer systems with these realities built into the foundation, not retrofitted after launch.

How We Secure AI Systems

Security in AI projects requires discipline across the entire delivery lifecycle. These practices are standard across all engagements, regardless of scale or engagement model.

- Environment Isolation - Development, staging, and production environments remain fully separated. Model API keys, credentials, and sensitive configurations never cross environment boundaries.

- Data Handling Protocols - Client data used for testing, fine tuning, or evaluation follows documented handling procedures. Access is logged, retention is time-bound, and deletion is verifiable.

- Secure Development Standards - Code reviews include security checks for prompt construction, input handling, and output processing. Dependencies are scanned and updated on a defined cadence.

- Credential Management - API keys, tokens, and secrets are stored in secure vaults with automated rotation. No credentials in code repositories, configuration files, or logs.

- Access Governance - Team member access is provisioned on a need-to-know basis and revoked at engagement end. All access changes are logged and auditable.

- Incident Response Readiness - Security incidents follow a defined escalation and communication protocol. Post-incident reviews identify root cause and preventive measures.
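Here is a minimal illustration of the credential rules above. Secrets are read from the runtime environment, where a vault agent or CI secret store would inject them. The application fails fast when a secret is missing instead of falling back to a hard-coded value, and any value that has to appear in a log is masked. The Python sketch below uses hypothetical names (`get_secret`, `mask`, `MODEL_API_KEY`). Automated rotation happens in the vault itself and is not shown.

```python
import os
from typing import Mapping


class MissingSecretError(RuntimeError):
    pass


def get_secret(name: str, environ: Mapping[str, str] = os.environ) -> str:
    """Read a secret injected at runtime; never fall back to a hard-coded default."""
    value = environ.get(name)
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Return a log-safe version of a secret that shows at most its last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```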

Security Architecture Pillars for Production AI Systems

| Security Pillar | How We Configure It | Business Impact |
|---|---|---|
| Data Protection & Privacy | PII detection and redaction before model calls, data residency controls, encryption at rest and in transit, retention policies. | Sensitive information stays within defined boundaries; regulatory exposure reduced. |
| Model Access & Authentication | Role based access controls, API key management, rate limiting, session handling, audit logging of all model interactions. | Clear accountability for system usage; unauthorized access prevented. |
| Prompt & Output Security | Input validation, prompt injection defenses, output filtering, content moderation layers, hallucination detection patterns. | System behaves predictably; harmful or inaccurate outputs caught before reaching users. |
| Vendor & Infrastructure Security | Secure API configurations, VPC isolation where supported, credential rotation, provider security posture assessment. | Third party risk managed; infrastructure aligned with enterprise security requirements. |
| Auditability & Traceability | Complete logging of prompts, responses, and system decisions; immutable audit trails; exportable compliance records. | Full visibility for internal review and regulatory examination. |

Certifications & Frameworks Behind Our AI Software & Application Development Security

You are not relying solely on your AI platform vendor’s cloud security; AI projects also run inside Clixlogix’s own security and compliance program.

EU AI Act

NIST AI RMF

SOC 2 Type II

GDPR

HIPAA

ISO 27001

AI projects introduce data flows and attack surfaces most security frameworks weren’t built for. Our ISO 27001 aligned framework addresses these realities.

AI Software & Application Solutions We Excel At

AI creates value when applied to specific business problems with clear operational context. The solutions below represent implementations we have delivered across industries, each with defined architecture, integration requirements, and measurable outcomes. Organizations exploring AI typically find their use case maps to one or more of these categories.

AI Software Development Case Studies

Hyperlocal, Time Sensitive Cuisine Delivery Platform for Chicago’s Suburban Market

Digital Engineering Food & Beverages

Zero Code Marketplace Platform Modernized for Antique Dealers’ Workflow Automation

Digital Engineering Retail & E-Commerce

Custom Business Intelligence Layer for a BMW Dealership in Denmark

Digital Engineering Automotive & Mobility

From Our Blog

Stories of everything that influenced us.

AI in Auto Insurance Agencies: 10 Practical Workflows to Reduce Daily Workload (+ 5 Bonus Workflow)


Cost Optimization Guide for n8n AI Workflows to Run 30x Cheaper


FAQs

AI software and application development costs vary based on complexity, data readiness, and integration requirements. A focused proof of concept typically runs $15,000 to $50,000. Production MVPs with core AI features range from $50,000 to $150,000. Full production systems with custom model development, enterprise integrations, and compliance requirements can reach $150,000 to $500,000 or more. We provide detailed cost breakdowns that separate build costs from ongoing operational expenses like inference, hosting, and model maintenance.

The most common cost drivers are data preparation, integration complexity, and scope evolution. Data work alone can consume 60 to 80 percent of project effort when datasets require cleaning, labeling, or augmentation. Integration with legacy systems often reveals undocumented dependencies. Mid-project scope changes, especially around model accuracy targets, add cycles. We address this through structured discovery, explicit assumptions in estimates, and milestone-based delivery that surfaces issues early.

Production AI incurs recurring expenses beyond initial development. These include inference costs (API usage or compute for self-hosted models), cloud infrastructure, monitoring and observability, periodic retraining, and support. Annual maintenance typically ranges from 15 to 25 percent of the initial build cost. We design systems with cost visibility built in, so you can track spend per user, per query, or per transaction and optimize accordingly.
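To show how the 15 to 25 percent rule of thumb plays out, the Python sketch below estimates a build-plus-maintenance range over several years. The $100,000 build cost and the three-year horizon are hypothetical inputs chosen for illustration. The estimate ignores inflation, usage growth and changes in inference pricing.

```python
def ownership_cost_range(build_cost: float, years: int, low_rate: float = 0.15, high_rate: float = 0.25) -> tuple[float, float]:
    """Return (low, high) total cost: the one-off build plus annual maintenance for each year."""
    low = build_cost + years * build_cost * low_rate
    high = build_cost + years * build_cost * high_rate
    return low, high


# Hypothetical example: a $100,000 build kept in production for three years.
low, high = ownership_cost_range(100_000, years=3)
print(f"${low:,.0f} to ${high:,.0f}")  # prints "$145,000 to $175,000"
```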

Timelines depend on scope and starting conditions. A proof of concept with available data typically takes 4 to 8 weeks. A production MVP ranges from 3 to 5 months. Enterprise systems with compliance requirements, multiple integrations, and organizational change management can extend to 9 to 12 months. We scope in phases with defined milestones, so you have working outputs at each stage rather than waiting for a single delivery.

A proof of concept validates whether AI can solve the problem with your data. It tests feasibility, not usability. An MVP is a functional system with core AI features, deployed to real users for feedback. It works but may lack scale or polish. A production system is fully engineered for reliability, security, and performance under load. Most projects move through all three stages, though timelines and investment increase at each level.

Industry data suggests that 70 to 90 percent of AI initiatives stall before deployment. Common causes include unclear problem definition, insufficient data quality, unrealistic accuracy expectations, and lack of integration planning. We mitigate these through structured discovery that validates feasibility before committing to build, clear success metrics defined upfront, and phased delivery that surfaces blockers early rather than at final delivery.

Pretrained models from providers like OpenAI, Anthropic, or open-source alternatives handle most business applications and offer faster time to value with lower upfront cost. Custom models make sense when you have proprietary data that creates competitive advantage, domain specific accuracy requirements that general models cannot meet, or cost constraints that favor lower inference expenses over higher training investment. We help you evaluate this trade-off based on your specific use case and long term economics.

We design systems with abstraction layers that allow model swapping without rebuilding the application. This includes standardized prompt templates, model agnostic APIs, and evaluation frameworks that benchmark alternatives. When using proprietary models, we ensure you retain ownership of fine tuning data and system logic. If a provider changes pricing or deprecates a model, you have a documented migration path. We remain model-agnostic and recommend based on your requirements, not our partnerships.

Model underperformance is a known risk in AI development. We address this by defining clear performance metrics before development, testing against representative data during build, and establishing fallback behaviors for edge cases. If accuracy targets are not met, options include additional training data, alternative model architectures, hybrid approaches combining AI with rules-based logic, or scope adjustment. Our phased approach surfaces performance issues during proof of concept, before significant investment.

You retain full ownership of all custom work, including trained models, fine-tuning data, prompts, application code, and generated outputs. We do not retain rights to your proprietary systems or data. Our standard agreements include explicit IP assignment clauses. For projects using third-party foundation models, we clarify licensing terms upfront so you understand what is yours and what remains with the model provider.

We implement data handling protocols based on sensitivity classification. Options include on-premise deployment, private cloud instances with regional data residency, data anonymization before model training, and role-based access controls. For systems using external model APIs, we configure data processing agreements and verify that inputs are not used for provider model training. Our security practices align with SOC 2, GDPR, HIPAA, and ISO 27001 requirements depending on your industry.

We build AI powered systems that meet regulatory requirements including GDPR, HIPAA, SOC 2, ISO 27001, and emerging AI-specific regulations like the EU AI Act. This includes audit trails for model inputs and outputs, explainability documentation for high-risk decisions, bias testing protocols, data retention policies, and human in the loop workflows where required. Compliance is designed into the architecture from the start, not retrofitted before launch.

Have a project in mind?

We'd love to help turn your ideas into reality.