Theodore Lowe, Ap #867-859 Sit Rd, Azusa New York

A systems-driven deep dive for business and technical leaders building AI into Zoho.

If you’ve tried integrating ChatGPT with Zoho CRM, you already know this story.

You start with optimism. Surely this is straightforward. A quick POST call. Maybe a webhook. A bit of Deluge. The internet is full of breezy “Zoho & ChatGPT integration in 10 minutes” videos, and every second LinkedIn post makes it sound like two lines of code and a handshake.

But the moment you try it inside a real Zoho environment, the optimism evaporates.

Suddenly the function arguments come through as {}, the payload disappears, or you hit a mysterious 401 right when the GPT output is supposed to reach CRM. You try an OAuth token. It expires. You refresh it. It still fails. You switch to API key mode. It works in “Preview,” but dies in production. You open the Zoho logs and see nothing.

Or worse, you see the function triggered but with an empty body.

And at some point, someone on your team asks the dreaded question:

“Does Zoho even allow ChatGPT to call it?”

This blog exists because that question is the exact fault line where most teams realize they’ve been attempting the wrong architecture altogether.

This is the part where things get uncomfortable. Not because Zoho is flawed. Not because ChatGPT is limited. But because the real integration model is the opposite of what most teams assume.

This blog is the download many teams wish they had before sinking hours into OAuth attempts, inbound function experiments, and horror movie debugging sessions.

You’re getting the actual working model, the one we deploy across CRM, Desk, Creator, Books, and custom integrations. The one that scales. The one that isn’t fragile. The one we stand behind in production.

Let’s start by addressing the root confusion, because every broken integration traces back to the exact same misunderstanding.

Most SaaS platforms expose a single, unified layer for inbound automation. Zoho doesn’t. Zoho operates in two separate architectural universes, and unless you understand the difference, you will continue designing the wrong direct integration model.

Let’s walk through them.

This is the public layer everyone knows. It’s how you create or update CRM records, query modules, search, insert notes, send emails, and fetch metadata. It requires:

This world is designed for servers, not for ChatGPT. And here’s the problem: GPT cannot complete OAuth. It cannot store your refresh tokens. It cannot rotate tokens without a secure backend. It cannot manage Zoho’s 1-hour expiry cycle.

Expecting GPT to call Zoho’s REST API directly is still unrealistic. GPT has no secure storage, token rotation, or long-lived state. A mediator, like MCP, must handle the OAuth lifecycle.
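To make the "OAuth lifecycle" concrete, here is a minimal sketch of the token handling a mediator would own. It is an illustration under assumptions, not Zoho's or MCP's implementation: `TokenManager`, `refresh`, and `safety_margin` are hypothetical names, and the actual refresh request to Zoho is left to whatever function you inject.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical contract: the injected function performs the real refresh
# request and returns (access_token, lifetime_in_seconds).
RefreshFn = Callable[[], Tuple[str, float]]


@dataclass
class TokenManager:
    """Caches an access token and refreshes it shortly before it expires."""

    refresh: RefreshFn
    clock: Callable[[], float] = time.monotonic
    safety_margin: float = 60.0  # refresh this many seconds before expiry
    _token: Optional[str] = None
    _expires_at: float = 0.0

    def get(self) -> str:
        """Return a usable access token, refreshing it only when needed."""
        now = self.clock()
        if self._token is None or now >= self._expires_at - self.safety_margin:
            token, lifetime = self.refresh()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

The point of the sketch is the placement: the refresh token and the clock live in a long-running backend process, which is exactly the state GPT does not have.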

This world looks similar at first…until you try to call it from outside Zoho. Zoho Function URLs can be generated and technically accept POSTs, but they behave like internal hooks. They are not designed to accept external payloads the same way a webhook endpoint would.

You will commonly see:

These functions are meant to be called from inside Zoho, from CRM workflows, Creator workflows, Blueprints, Desk events, Flow blocks. They are part of Zoho’s internal plumbing.

So we arrive at a simple but important truth:

GPT cannot call Zoho’s REST API (because of OAuth).

GPT cannot call Zoho Functions (because they are internal).

Therefore GPT cannot talk to Zoho directly. This is where most teams finally realize that the integration direction they assumed, GPT to Zoho direct, is the one model that almost never works.

And here’s the part that catches most teams off guard: GPT simply wasn’t designed to interact directly with systems that require secure state, credential storage, or strict payload shaping. It has no long-term memory, no secure storage, and no way to manage OAuth reliably.

That’s why every stable integration introduces a middle layer, something that can hold secrets, manage refresh cycles, validate payloads, and protect Zoho from malformed or excessive requests.

Sometimes this mediator is Zoho’s own MCP layer (where available). Sometimes it’s n8n. Sometimes it’s Zoho Flow. Sometimes it’s a lightweight Node.js proxy. Sometimes it’s a full internal middleware service.

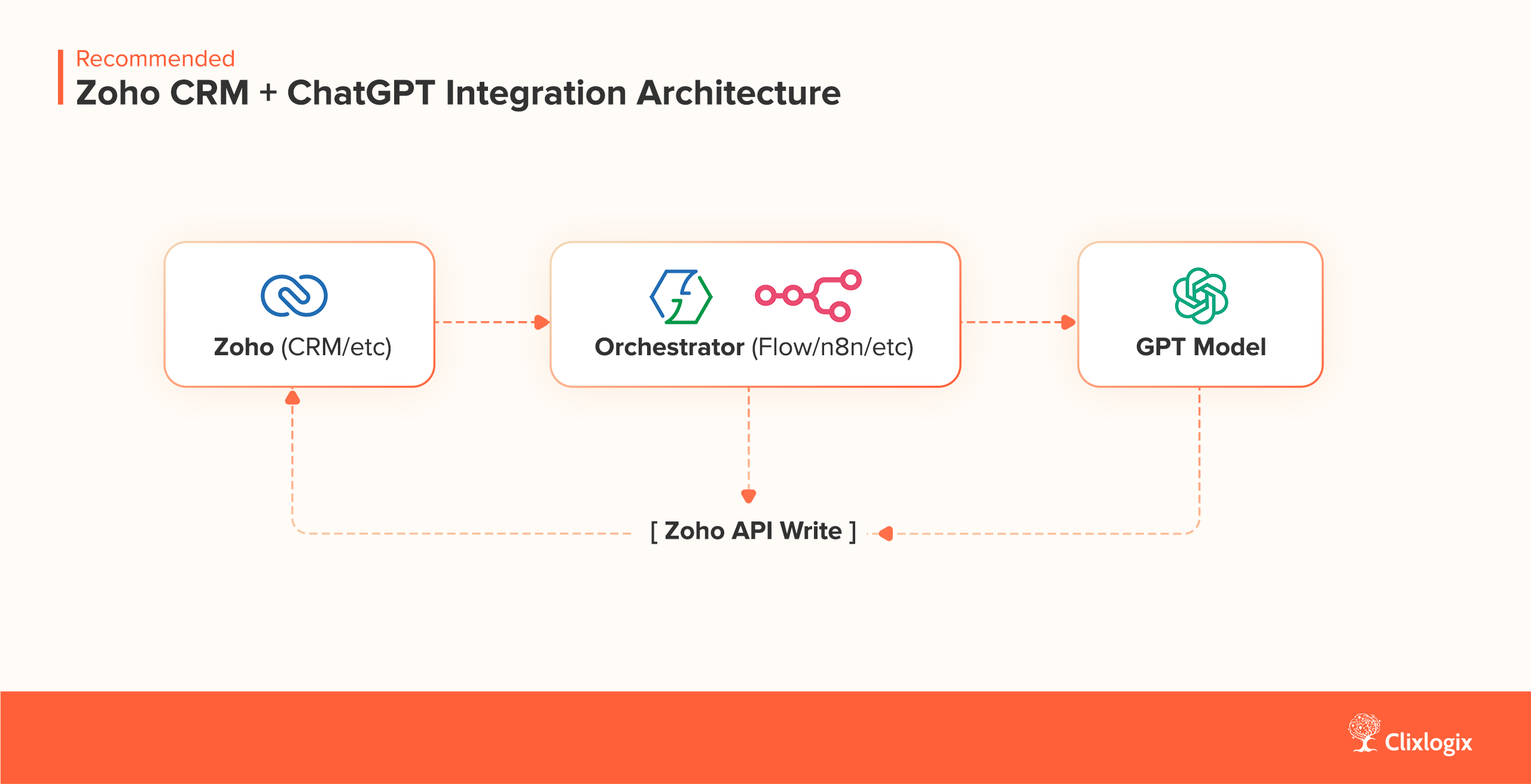

GPT calls the mediator, the mediator calls Zoho with proper authentication, Zoho returns data, and the mediator returns structured output back to GPT.
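As a rough sketch of that round trip, the handler below shows the mediator's responsibilities in one place: reject unknown or empty requests, attach credentials itself, and hand back only structured data. Everything named here (`handle_tool_call`, `ALLOWED_TOOLS`, the `get_lead` and `add_note` tool names, the request shape) is hypothetical, and the real Zoho call is injected rather than implemented.

```python
from typing import Any, Callable, Dict

# Hypothetical allow-list of operations the mediator is willing to expose.
ALLOWED_TOOLS = {"get_lead", "add_note"}

# Injected dependencies: one supplies a valid access token, the other
# performs the authenticated request against Zoho.
GetToken = Callable[[], str]
ZohoCall = Callable[[str, Dict[str, Any], str], Dict[str, Any]]


def handle_tool_call(
    request: Dict[str, Any], get_token: GetToken, call_zoho: ZohoCall
) -> Dict[str, Any]:
    """Validate a GPT tool request, call Zoho, and return structured output."""
    tool = request.get("tool")
    args = request.get("args")

    if tool not in ALLOWED_TOOLS:
        return {"ok": False, "error": f"unknown tool: {tool!r}"}
    if not isinstance(args, dict) or not args:
        return {"ok": False, "error": "empty or malformed arguments"}

    token = get_token()  # the token never leaves the mediator
    try:
        data = call_zoho(tool, args, token)
    except Exception as exc:  # surface failures as data instead of silence
        return {"ok": False, "error": f"zoho call failed: {exc}"}

    return {"ok": True, "tool": tool, "data": data}
```

Note that the response contains the Zoho data but never the token, so GPT sees results without ever holding credentials.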

Without this middle layer, direct GPT to Zoho calls remain brittle, high-risk, and operationally expensive.

Zoho enforces API quotas and rate limits that vary by plan and resource usage. When a backend system initiates the calls, these limits remain predictable. But when GPT sits upstream, issuing multiple writes, retries, or step-based reasoning sequences, the volume becomes unpredictable.

GPT cannot:

A single misinterpreted prompt can trigger dozens of updates. A looping prompt can trigger hundreds. And an unbounded chain-of-thought sequence can trigger thousands.

Once limits are hit, Zoho temporarily stops accepting API calls until the quota resets. This is manageable when Zoho initiates the workflow. It’s a liability when GPT is in charge.
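One way a mediator can keep GPT-initiated volume predictable is a simple write budget in front of Zoho. The sketch below uses a fixed-window counter; `WriteBudget` and its limits are illustrative assumptions and are not tied to Zoho's actual quota numbers, which vary by plan.

```python
import time
from typing import Callable


class WriteBudget:
    """Fixed-window cap on how many Zoho writes GPT may trigger."""

    def __init__(
        self,
        max_calls: int,
        window_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self.clock = clock
        self.window_start = clock()
        self.used = 0

    def allow(self) -> bool:
        """Return True and consume one slot if budget remains in this window."""
        now = self.clock()
        if now - self.window_start >= self.window_seconds:
            # A new window has started, so the counter resets.
            self.window_start = now
            self.used = 0
        if self.used >= self.max_calls:
            return False
        self.used += 1
        return True
```

The mediator would check `allow()` before each write and refuse (or queue) the request when it returns `False`, so a looping prompt exhausts the mediator's budget rather than the account's API quota.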

Another reason the safe pattern is always the same:

“Zoho should trigger the workflow. GPT should enrich the workflow. A mediator should own the API surface.”

The surprising part is that Zoho inbound calls fail silently. This frustrates both engineering and business teams, because you don’t get the comfort of a noisy error. You just… get nothing. Teams often see:

This is why so many companies think their integration “almost works.” It doesn’t. It never did.

The underlying reason is that Zoho is structured in ways that make inbound calls fragile unless they follow very specific rules:

Zoho isn’t trying to frustrate you. Zoho is trying to protect your CRM from uncontrolled inbound calls.

But the reality is this:

“Direct inbound architecture is a dead end. The only reliable inbound path is a mediated one, via MCP or a proxy. Outbound architecture (Zoho to GPT to Zoho) remains the simplest and most stable pattern.”

And that takes us to the architectural inversion that fixes everything.

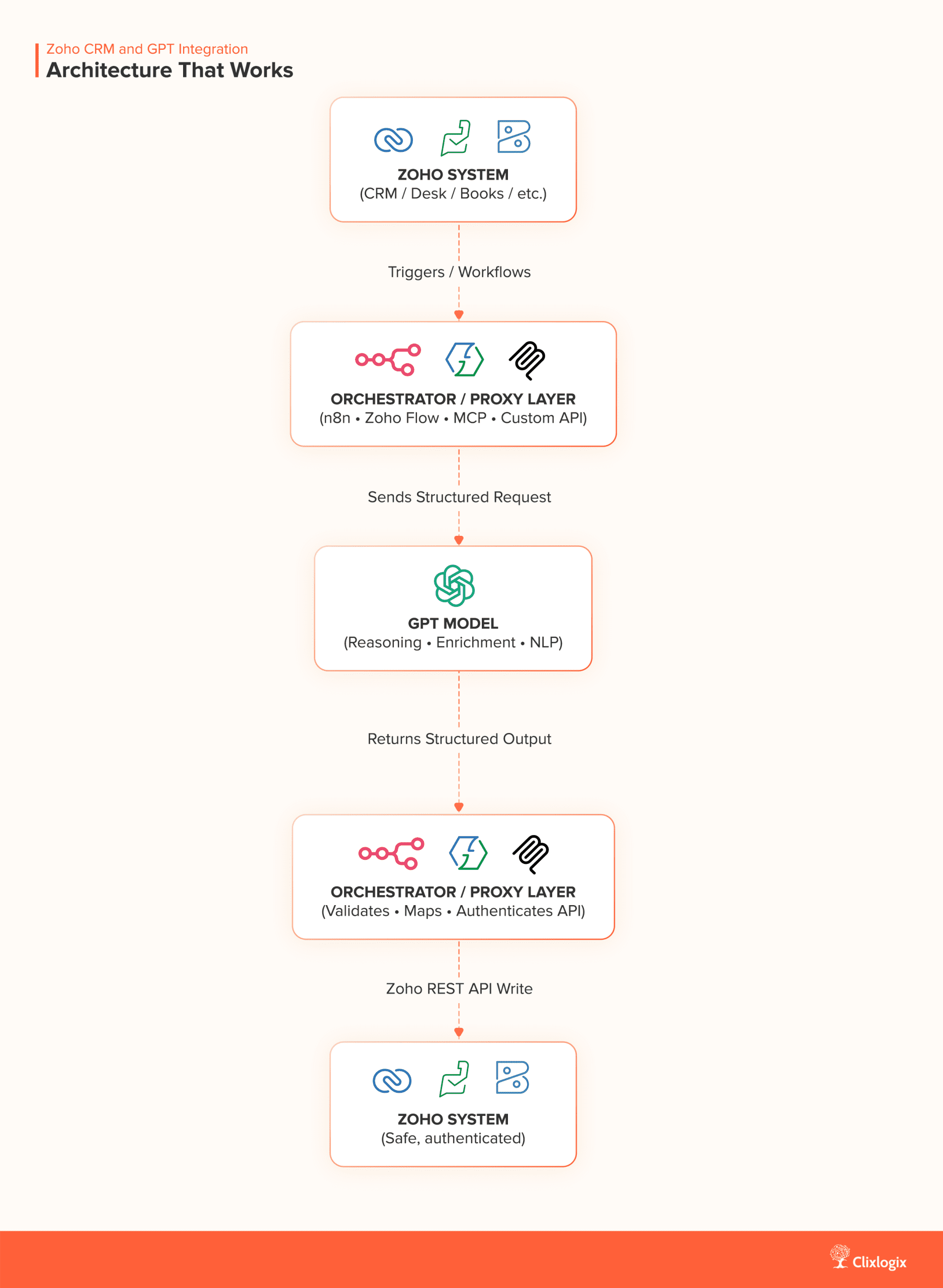

The working architecture looks like this:

(Zoho triggers, GPT responds, Zoho writes back)

More concretely:

Let’s unpack why this works so consistently.

Zoho is the authenticated system of record. It knows how to:

GPT never touches your CRM directly. It never sees OAuth tokens. It never holds a refresh token.

You avoid:

Instead you get:

This architecture creates a very clean cost profile:

No retry storms. No orphaned webhook calls. No repeated OAuth failures. No accidental infinite loops. The architecture is boring, and boring is exactly what you want in production.

Most teams assume, “We’ll need a server for this.” You might. But most of the time, you won’t.

Zoho’s new MCP layer is, in fact, a formal version of this mediator. MCP holds the tokens, manages authentication, validates schemas, and safely exposes tools to GPT. If you’re using MCP, you don’t need to build your own proxy.

In these cases, the integration is entirely serverless.

This includes situations where:

In these cases, the proxy does a few important things:

In other words:

“When GPT must reach Zoho first, we use a middleware proxy. When Zoho reaches GPT first, we use no proxy.”

This is the part of the story where theory gives way to what actually survives contact with production. Over the last few years, across insurance, wellness, financial services, education, real estate, and industrial operations, a huge array of AI enabled workflows have appeared inside Zoho systems we work with.

It doesn’t matter whether the CRM is heavily customized or the business runs a fairly vanilla setup. These use cases solve operational problems because they map cleanly onto Zoho’s architecture.

Each of the following use cases exists for a very practical reason – it reduces a measurable amount of human effort, it avoids the architectural traps that make integrations brittle, and it produces business outcomes that leadership can actually feel.

And importantly, each one respects what Zoho is great at (structured workflows, internal triggers, secure updates) and what GPT is great at (summarization, classification, reasoning, enrichment).

Let’s walk through these use cases one by one.

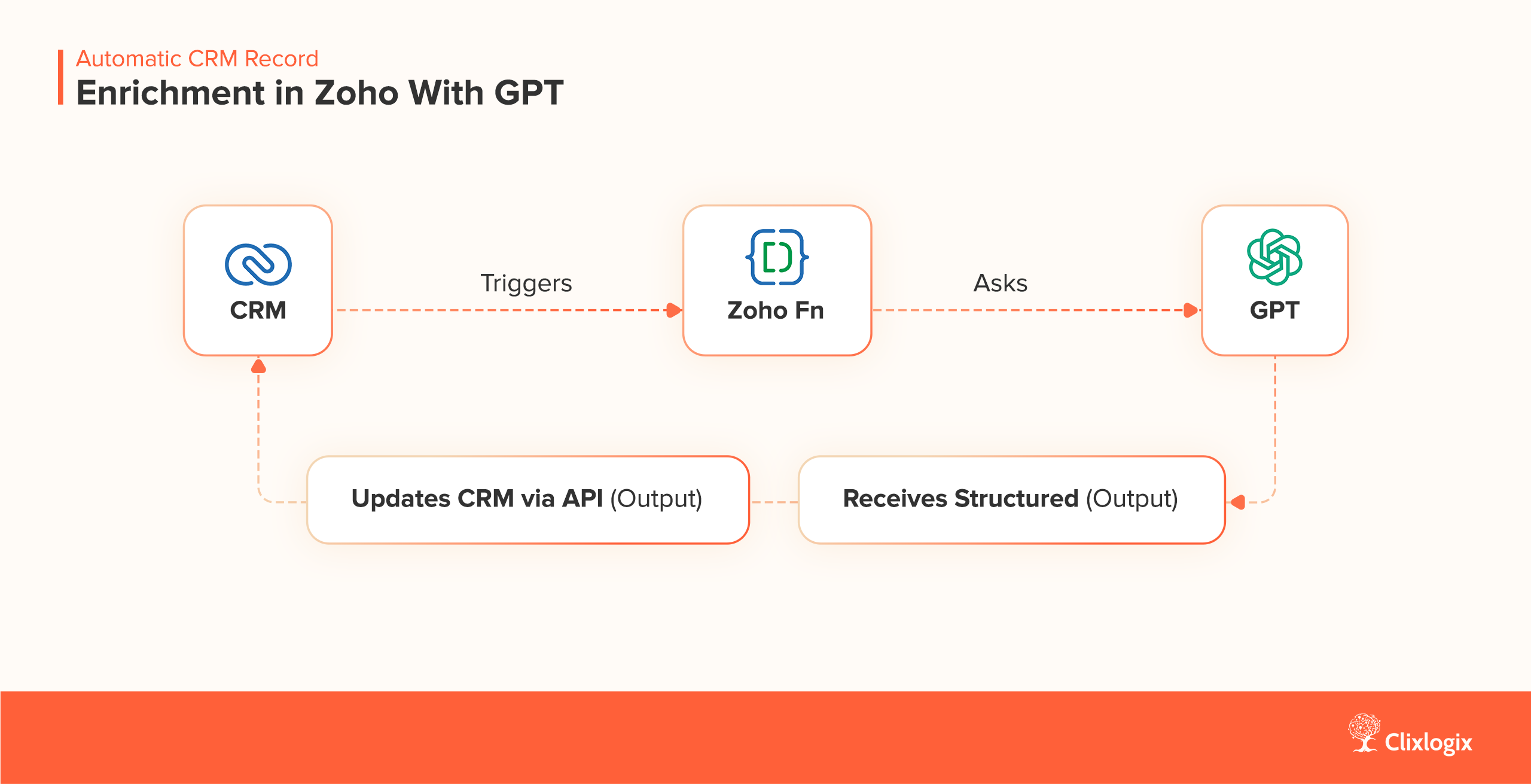

When a new lead enters Zoho CRM, the last thing your team wants is another five minutes of manual classification – What industry is this? Does this contact look like an ICP fit? Is the intent clear? Does the person seem ready to buy or just browsing? What fields are missing that could be filled with context clues?

This is where GPT becomes the assistant that nobody sees but everyone benefits from. The workflow begins in Zoho itself, because that’s where the record is born, and then moves out to GPT for interpretation before returning to CRM with structured updates Zoho can trust.

The flow looks like this inside your system:

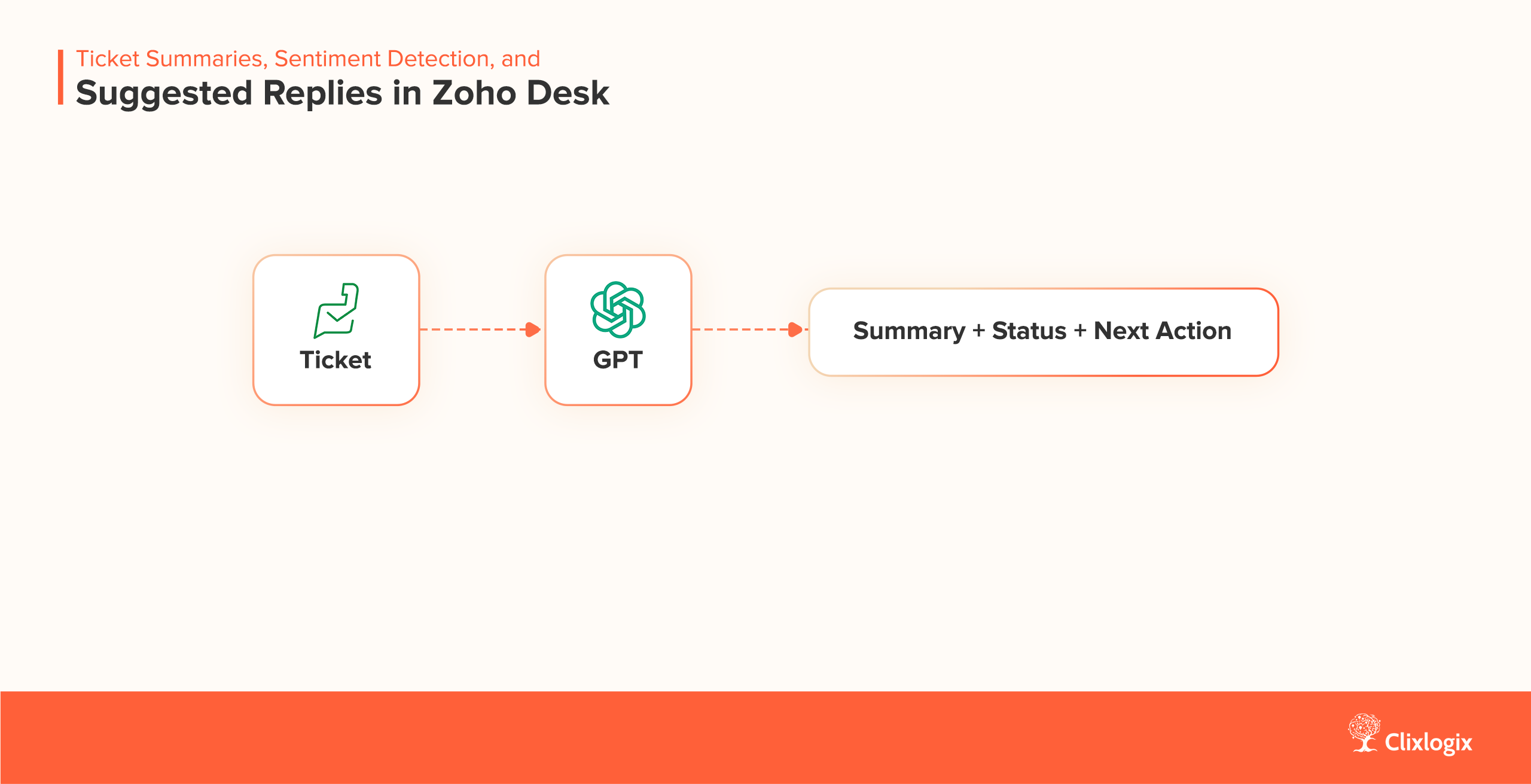

Support teams drown not because of the number of tickets but because of the cognitive overhead required to understand each one. Every ticket comes with its own context, emotional tone, urgency level, and set of underlying issues. Before an agent even starts solving the problem, they must first decode it, and that decoding step is what AI is so good at.

In this workflow, Zoho Desk initiates the process the moment a ticket arrives or updates. GPT then takes over the interpretation, summarizing the ticket in human friendly language, detecting sentiment so the agent knows how sensitive the tone should be, and suggesting replies that match the organization’s communication guidelines.

The sequence looks like this:

This use case delivers compounding returns because it improves consistency. Responses become clearer. Triage becomes easier. Agents avoid fatigue because the cognitive load is noticeably lower. And the cost stays minimal because ticket summaries are short and predictable.
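The interpretation step described above only helps if GPT's reply is treated as untrusted input before it reaches the ticket. Below is a minimal sketch of that check, assuming the prompt asks GPT to answer in JSON with `summary`, `sentiment`, and `suggested_reply` keys. Those key names and the allowed sentiment values are assumptions for illustration, not a Zoho Desk schema.

```python
import json
from typing import Any, Dict

# Hypothetical set of values the prompt asks GPT to choose from.
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def parse_triage(raw_reply: str) -> Dict[str, Any]:
    """Turn GPT's raw reply into a vetted triage result or a clear failure."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return {"ok": False, "reason": "reply was not valid JSON"}
    if not isinstance(data, dict):
        return {"ok": False, "reason": "reply was not a JSON object"}

    summary = data.get("summary")
    sentiment = data.get("sentiment")
    reply = data.get("suggested_reply")

    if not isinstance(summary, str) or not summary.strip():
        return {"ok": False, "reason": "missing summary"}
    if not isinstance(sentiment, str) or sentiment not in ALLOWED_SENTIMENTS:
        return {"ok": False, "reason": "unexpected sentiment value"}

    return {
        "ok": True,
        "summary": summary.strip(),
        "sentiment": sentiment,
        # A missing or non-text reply degrades to an empty suggestion.
        "suggested_reply": reply if isinstance(reply, str) else "",
    }
```

When `ok` is `False`, the workflow can simply leave the ticket untouched for a human, which keeps a bad model response from ever becoming a bad ticket update.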

For many organizations, this use case is the first time support teams say,

“This AI thing actually makes my day better.”

Email is the most unstructured, chaotic input channel any business has. People send requests, updates, documents, complaints, opportunities, sometimes all in the same thread. Humans are good at interpreting this mess. CRMs are not.

That’s where GPT becomes the interpreter. This workflow examines every inbound email, determines what the sender is trying to accomplish, extracts meaningful entities, identifies whether it belongs in CRM, and prepares the information in a form Zoho can safely ingest.

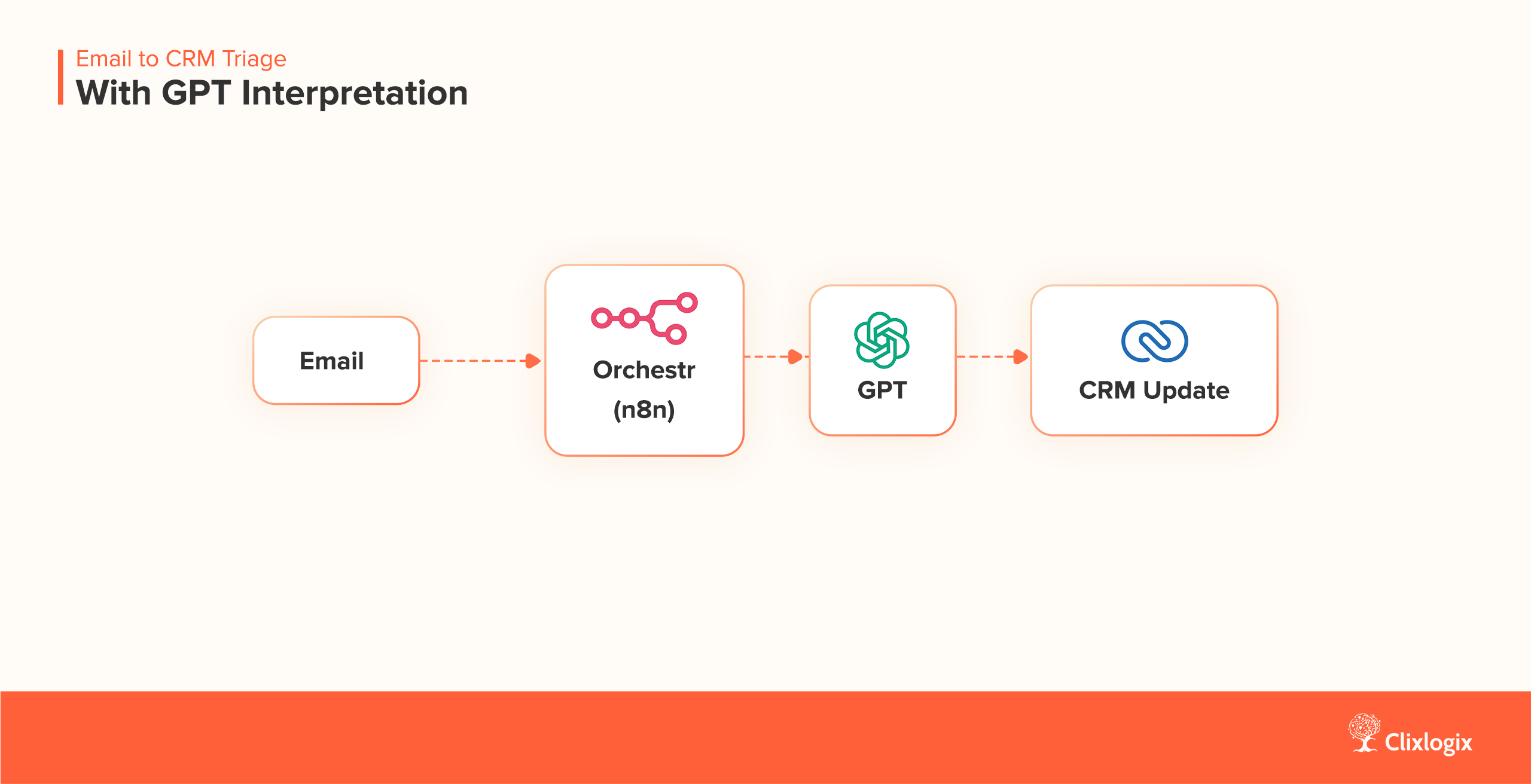

This is usually orchestrated through n8n or Zoho Flow:

This is one of those use cases that leadership feels instantly because CRM hygiene improves on autopilot. Leads don’t get buried. Opportunities don’t get missed. Tasks don’t get lost in an inbox. Instead, the CRM starts acting like a thinking assistant that identifies what belongs where, and routes it accordingly.

The AI bridges a gap the CRM was never designed to close.

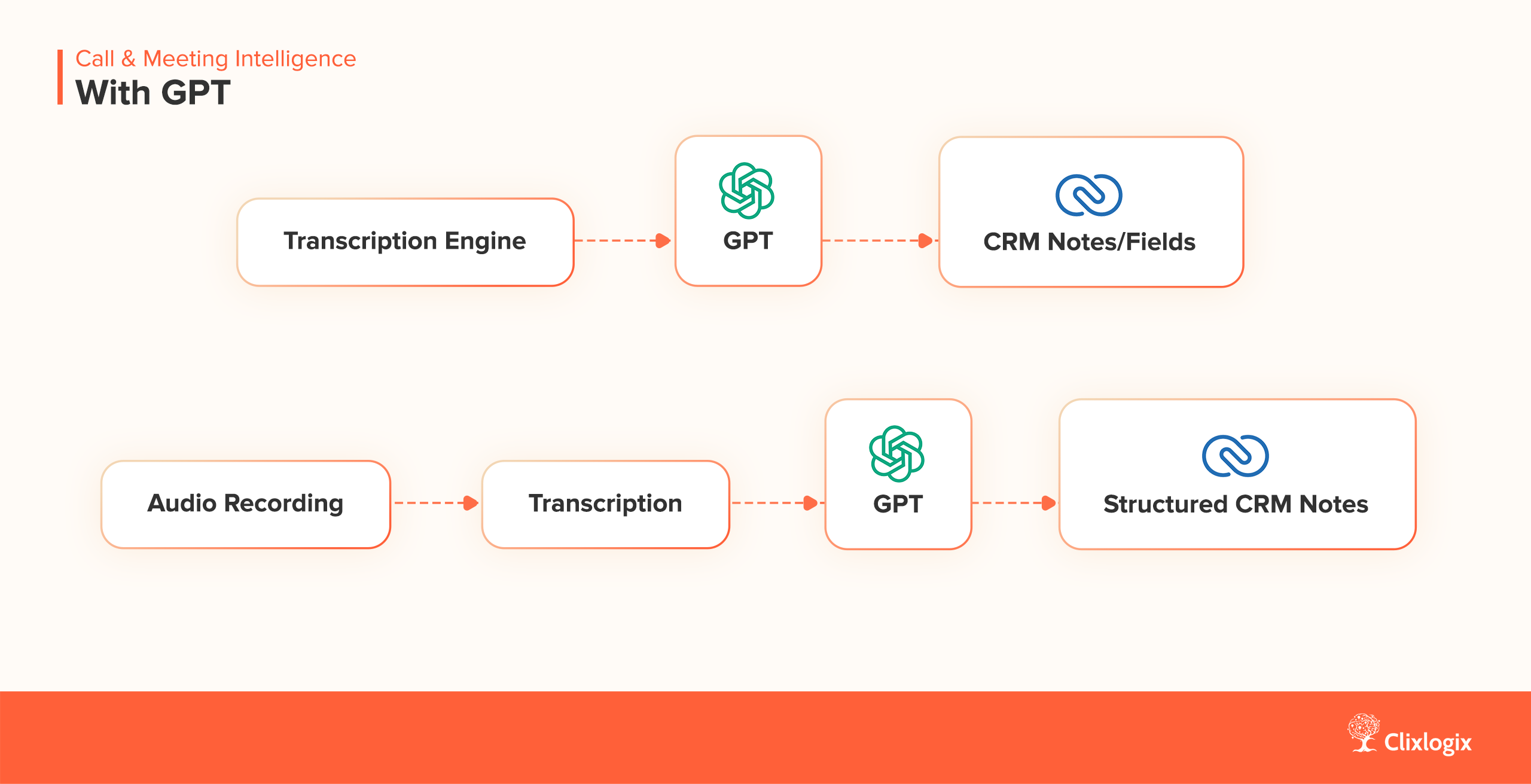

As companies mature in their AI adoption, they start looking beyond text and email. They want more context awareness inside CRM: what actually happened on that discovery call, what objections came up during the negotiation, what action items were agreed upon, and what risks the rep heard but didn’t record.

Call transcripts and meeting recordings are full of information that never makes it into CRM because humans simply don’t have the time to convert a 30-minute conversation into structured notes. GPT excels at doing exactly that.

Here’s the high level workflow:

This use case is typically more expensive than the earlier ones because transcripts are long. But for sales teams, the return is dramatic. Managers get better visibility. Reps get cleaner notes. Forecasting improves because deals are documented truthfully. And the CRM stops being a graveyard of half written call summaries.

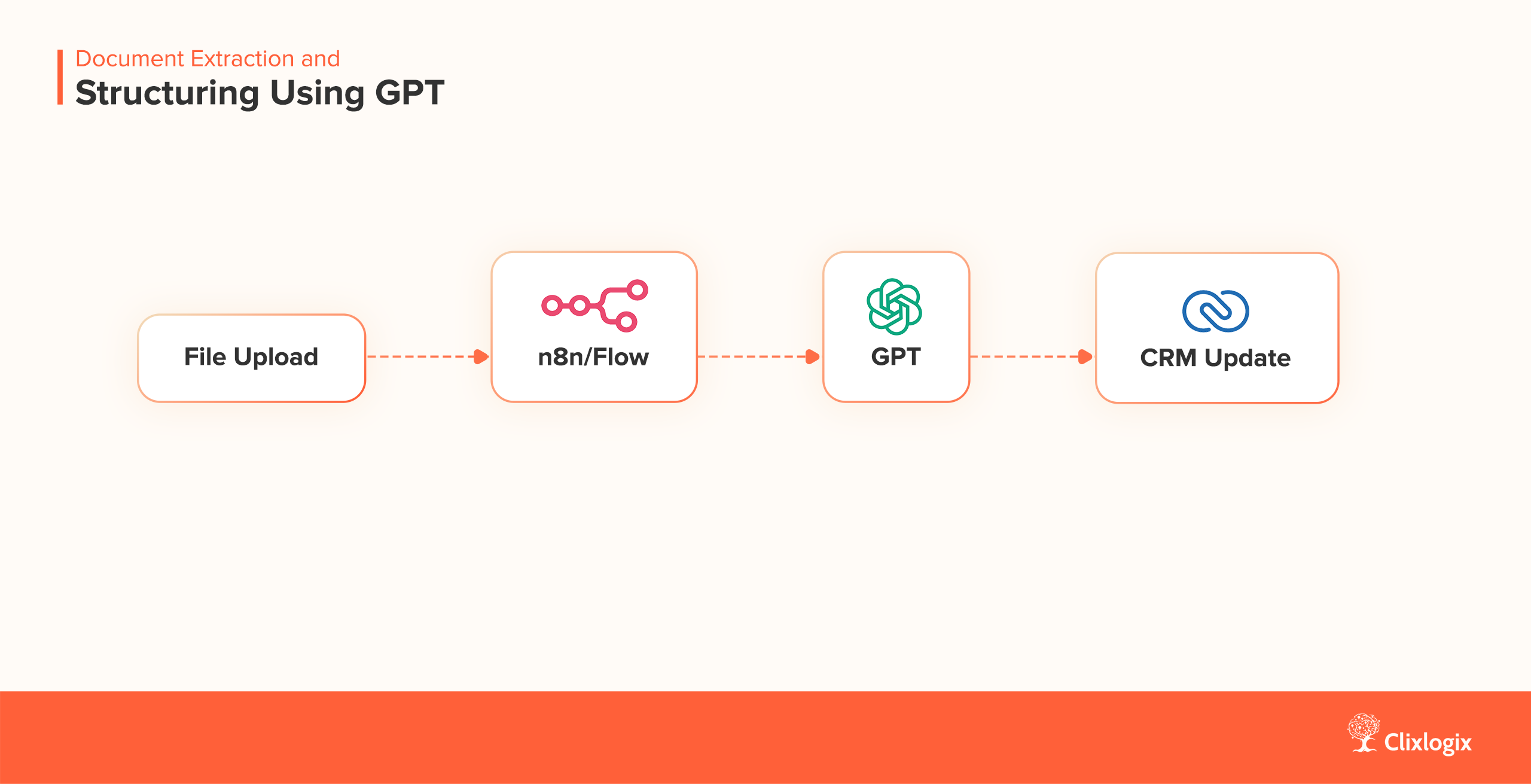

Every business has some form of document-heavy workflow: contracts, application forms, onboarding packets, bank statements, proposals, resumes, compliance documents. These come in as PDFs, scanned images, or long text, none of which plug naturally into CRM fields.

GPT is particularly powerful in this context because it transforms unstructured content into structured, CRM ready information. This includes pulling out deadlines, identifying clauses, summarizing risks, flagging anomalies, and extracting key details that normally require human review.

The system flow tends to look like this:

Organizations often adopt this use case after they’ve seen success with simpler ones because it amplifies productivity. Teams that previously spent hours reviewing documents now spend minutes validating AI extracted output. And even with moderate GPT costs, the efficiency gain is almost always worth it, because the business finally unlocks the information trapped in documents.

This is the uncomfortable chapter of the story, the part teams don’t talk about publicly but spend weeks wrestling with internally. Every failed or delayed Zoho & GPT integration has roots in a handful of architectural missteps.

The symptoms vary, the error messages differ, the logs are inconsistent, but the root causes are shockingly predictable. Zoho has very clear boundaries about what it can call, how it can be called, and what it will accept from outside its own ecosystem.

GPT has its own constraints around tokens, state, and security. When teams try to stitch the two together without respecting those boundaries, the system breaks.

This is why these traps are so dangerous. They don’t announce themselves. They just erode stability until the whole integration becomes unreliable. Let’s go through the major ones in detail.

This is by far the most common mistake, and it happens because on paper it looks simple: “I’ll just give GPT the OAuth URL and let it call Zoho’s API directly.” The problem is that OAuth isn’t a one time handshake. It’s a lifecycle. Zoho issues access tokens that expire quickly. It expects the caller to store refresh tokens securely and rotate them. It expects state management, client secrets, and scope handling.

This is why GPT requires a mediator like MCP. It doesn’t have a secure token vault. It cannot perform a multi step OAuth exchange safely.

Even if you hack together a temporary solution and get GPT to call Zoho once, it will break the moment the access token expires. And because GPT can’t rotate tokens, every call becomes a gamble. This isn’t a limitation of Zoho, it’s a misuse of GPT. The caller has to be a system capable of securely holding secrets. GPT is not that system.

Zoho Functions, especially those generated from CRM or Creator, can look like normal external endpoints. They accept POST requests, they show an example URL, and they don’t warn you about their internal restrictions. So teams assume they can send JSON payloads straight from GPT actions or external systems.

But Zoho Functions don’t behave like public REST endpoints. Inside Zoho’s world, functions expect parameters injected from the platform (workflows, triggers, Deluge map objects). When an external POST arrives, Zoho drops or empties the body. Sometimes fragments appear, sometimes nothing binds, and sometimes the entire request disappears without explanation. Debugging this is maddening because the function logs show the function was executed, yet every variable inside it is empty. This is how the system is designed. Function URLs are not meant to be called from outside Zoho.

This mistake usually comes from good intentions: teams want to simplify early experimentation. So they take an access token and paste it into a GPT action, a Flow block, or some external snippet. It works once or twice. It feels promising. Then it suddenly fails. Zoho access tokens expire within an hour, and refresh tokens have restrictions on reuse.

The moment that token rotates, everything collapses. And because that token is now living in multiple places, it becomes impossible to control or trace. You end up chasing down where the old token lives, updating everything manually, and apologizing to your internal stakeholders for downtime that shouldn’t have existed in the first place. Hardcoding secrets is one of those shortcuts that always feels harmless until it explodes.
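The alternative to pasting tokens into several places is to have one process load its credentials from its environment (or a secret store) at startup and fail loudly if anything is missing. A minimal sketch follows; the variable names `ZOHO_CLIENT_ID`, `ZOHO_CLIENT_SECRET`, and `ZOHO_REFRESH_TOKEN` are assumptions chosen for illustration, not names Zoho defines.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical environment variable names for the mediator's credentials.
REQUIRED_VARS = ("ZOHO_CLIENT_ID", "ZOHO_CLIENT_SECRET", "ZOHO_REFRESH_TOKEN")


def load_zoho_credentials(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read credentials from the environment; refuse to start if any are absent."""
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not source.get(name)]
    if missing:
        raise RuntimeError("missing credentials: " + ", ".join(missing))
    return {name: source[name] for name in REQUIRED_VARS}
```

With a single loading point, rotating a secret means changing it in one place, and nothing in a prompt, GPT action, or Flow block ever contains the value.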

Even if you somehow manage to bypass the authentication hurdles and get GPT to write directly into CRM modules, you inherit an entirely different category of risk. GPT doesn’t understand module structures, validation rules, picklist constraints, required fields, or business logic. Zoho does.

GPT will submit the wrong types, break lookups, mismatch picklists, and ignore hidden validation rules. Worse, everything it writes bypasses your audit trails, because the system doesn’t see a Zoho user performing the action, it sees an untrusted inbound request. This leads to corrupted records, missing history, and inconsistent data that no dashboard can fully trust. If your CRM is the system of record, letting GPT write directly is one of the fastest ways to degrade its integrity.
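To illustrate what "Zoho validates, GPT only proposes" can look like at the boundary, here is a small sketch that checks a GPT-proposed update against a field schema before anything is written. The schema contents (`Industry`, `Lead_Score`, the picklist values) and the function name `validate_update` are hypothetical; in a real system the rules would come from your own module configuration.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical field rules for a Leads-style module.
LEAD_SCHEMA: Dict[str, Dict[str, Any]] = {
    "Industry": {
        "type": str,
        "picklist": {"Insurance", "Education", "Real Estate"},
        "required": True,
    },
    "Lead_Score": {"type": int, "required": False},
}


def validate_update(
    payload: Dict[str, Any], schema: Dict[str, Dict[str, Any]]
) -> Tuple[Dict[str, Any], List[str]]:
    """Return (fields that passed every rule, list of problems found)."""
    clean: Dict[str, Any] = {}
    errors: List[str] = []

    for name, rule in schema.items():
        if name not in payload:
            if rule.get("required"):
                errors.append(f"missing required field: {name}")
            continue
        value = payload[name]
        # bool is a subclass of int in Python, so reject it for int fields.
        wrong_type = not isinstance(value, rule["type"]) or (
            rule["type"] is int and isinstance(value, bool)
        )
        if wrong_type:
            errors.append(f"wrong type for {name}")
            continue
        picklist = rule.get("picklist")
        if picklist is not None and value not in picklist:
            errors.append(f"value not in picklist for {name}: {value!r}")
            continue
        clean[name] = value

    for name in payload:
        if name not in schema:
            errors.append(f"unknown field: {name}")

    return clean, errors
```

A conservative policy is to write only when `errors` is empty and otherwise route the record to a person, so a mismatched picklist value becomes a review task instead of a corrupted record.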

Zoho Flow is a great orchestrator when it’s used for what it was built for: short, branching workflows that connect apps and automate simple sequences. But Flow isn’t designed to act as a token managing middleware layer sitting between GPT and Zoho. Long running flows, multi branch decisioning, schema validation, complex error handling, token rotation, and retry logic are all areas where Flow struggles.

Teams often push Flow into this role because it’s convenient and already part of their Zoho stack. But the moment the workflow involves secure token management or multistep API logic, Flow becomes brittle. It will time out, consume unnecessary task counts, break without logging enough detail, or fail to maintain a reliable token refresh cycle. Flow is an automation tool, not a security boundary. Using it as a middleware / proxy is like using a Swiss Army knife as a load bearing beam. It works for a bit, but not for long.

Every one of these mistakes creates long term consequences that aren’t obvious at the beginning. The integration might work in a two hour prototype but collapses under real traffic. The CRM might accept early data but slowly accumulate inconsistencies. Teams end up duct taping solutions until the system becomes an unmaintainable mess that nobody wants to touch.

The organizations that steer clear of these traps tend to ship Zoho & GPT workflows that remain stable for years. The ones that don’t end up refactoring half their automation stack within twelve months.

“Good architecture is what continues working when nobody is watching.”

This table gives a high level visual of the traps above, designed to help teams recognize them early.

| What Teams Commonly Attempt (Incorrect) | What Actually Works (Correct Architecture) |
|---|---|
| GPT calling Zoho OAuth endpoints | Either (a) Zoho initiates the call itself (Zoho -> GPT -> Zoho), or (b) GPT calls a secure mediator layer such as Zoho MCP or your own proxy. The mediator handles OAuth, token rotation, schema validation, retries, and the actual API call into Zoho. |
| Posting directly into Zoho Function URLs | Use an orchestrator like n8n or Zoho Flow to trigger GPT externally, then write back using the Zoho REST API with controlled credentials. |
| Hardcoding Zoho access tokens | Store tokens in a secure middleware proxy (a lightweight token handler) that manages refresh cycles and forwards authenticated requests. |
| Letting GPT write directly into CRM modules | Let Zoho perform the final write. GPT only returns structured data, and Zoho validates everything before updating records. |
| Using Zoho Flow as a full proxy layer | Use Flow for simple orchestrations, but handle token refresh, retries, and branching in either n8n or a minimal OAuth proxy. |

Below is the simplest possible representation of system design that always works, across every Zoho product:

This diagram is the ground truth. If your architecture matches this shape, you’re on solid ground. If not, you’re probably building on sand.

In the end, the real unlock is about adopting an integration mindset that respects how modern systems behave. When organizations stop forcing tools to act outside their design and instead align them to their natural strengths, the workflows become boring in the best possible way. Predictable. Traceable. Maintainable. The kind of architecture future teams can look at without wincing.

Zoho plays the role of the source of truth. GPT plays the role of the reasoning layer. And the glue in between, the orchestrator or the tiny proxy aka middleware, keeps the whole system honest. Zoho MCP is simply Zoho’s official implementation of this, a mediator that lets GPT reason while Zoho controls the write path. It’s a simple configuration, but it’s the one that holds up as volume grows, teams scale, and real business logic starts flowing through the pipes.

Once you build AI into Zoho this way, something subtle but powerful shifts: the CRM stops being a passive database and starts behaving more like a thinking partner. It’s not flashy. It’s not magic. It’s just the result of getting the architecture right.

As CEO of Clixlogix, Pushker helps companies turn messy operations into scalable systems with mobile apps, Zoho, and AI agents. He writes about growth, automation, and the playbooks that actually work.
