Theodore Lowe, Ap #867-859 Sit Rd, Azusa New York

Building an automation that uses AI is easy. Operating one is not. Over the past year we watched real n8n workflows grow from promising demos into temperamental systems. Some aged well; most developed aches. The difference was never the cleverness of a prompt or the brand of a model. It was whether we treated the workflow as a living system: observed in the wild, shaped with explicit contracts, tested cheaply, and given guardrails for cost and failure.

What follows is a field guide derived entirely from that work. It’s written in the language of patterns rather than prescriptions. Each pattern names a recurring force, the failure it invites, the move that keeps the system honest, and the consequences you should expect. The tools are n8n’s; the habits are general.

The optimistic story begins the same way: “Let’s add an AI step to our n8n flow.” The canvas fills quickly. An IMAP trigger comes first, then an HTTP Request, a Code node to tidy the payload, an LLM step in the middle, and Slack at the end. Everyone smiles during the demo.

Then, the system drifts.

An upstream team edits an email template. The subject grows longer, a vendor adds a field, the model becomes wordier. The once-elegant flow stalls or, worse, succeeds while doing the wrong thing.

In that moment you learn the uncomfortable truth. This isn’t a script. It’s a small distributed system with coupling, feedback, latency, and cost. You don’t “finish” it; you shepherd it. The rest of this guide is about how to do that with n8n.

Most automations begin in the tool. Someone opens n8n, drags a node, and the solution takes shape before the problem does. When we lead with technology, we encode assumptions instead of reality. We automate a guess. Think like a detective, not an engineer. Before you touch the canvas, shadow the people who do the work for a day or two. Write their steps in plain English, resisting jargon. Find the handful of cases that cause most of the daily pain. Describe the ideal end state using time and latency, not features.

A concrete case will do. Sarah manages a support inbox, and most mornings she spends forty-five minutes categorizing and routing messages. The desired world is simpler: fifteen minutes, with urgent emails visible to a human within thirty. That’s the whole brief, and it’s enough.

Framing the result as a human improvement gives you a yardstick. Later, when a request arrives to add a novel branch or feed an extra API, you can ask whether it moves Sarah closer to fifteen minutes or further away. Many clever ideas don’t survive that question.

A blank n8n canvas encourages ambition. It also encourages reinventing the wheel. Starting from scratch wastes energy and hides prior art that already solves sixty percent of your problem.

Borrow first, shape later. Search the n8n community templates using the exact language of your use case, scan recent threads in communities where practitioners trade flows, and watch a relevant YouTube tutorial. Copy the skeleton that gets you most of the way there and adapt it. Annotate your canvas with visible TODOs; you’ll remove them once the MVP breathes.

Beginning from a working skeleton shortens the path to a running flow. You inherit proven node sequences and spend your effort on fit and finish, not invention for its own sake.

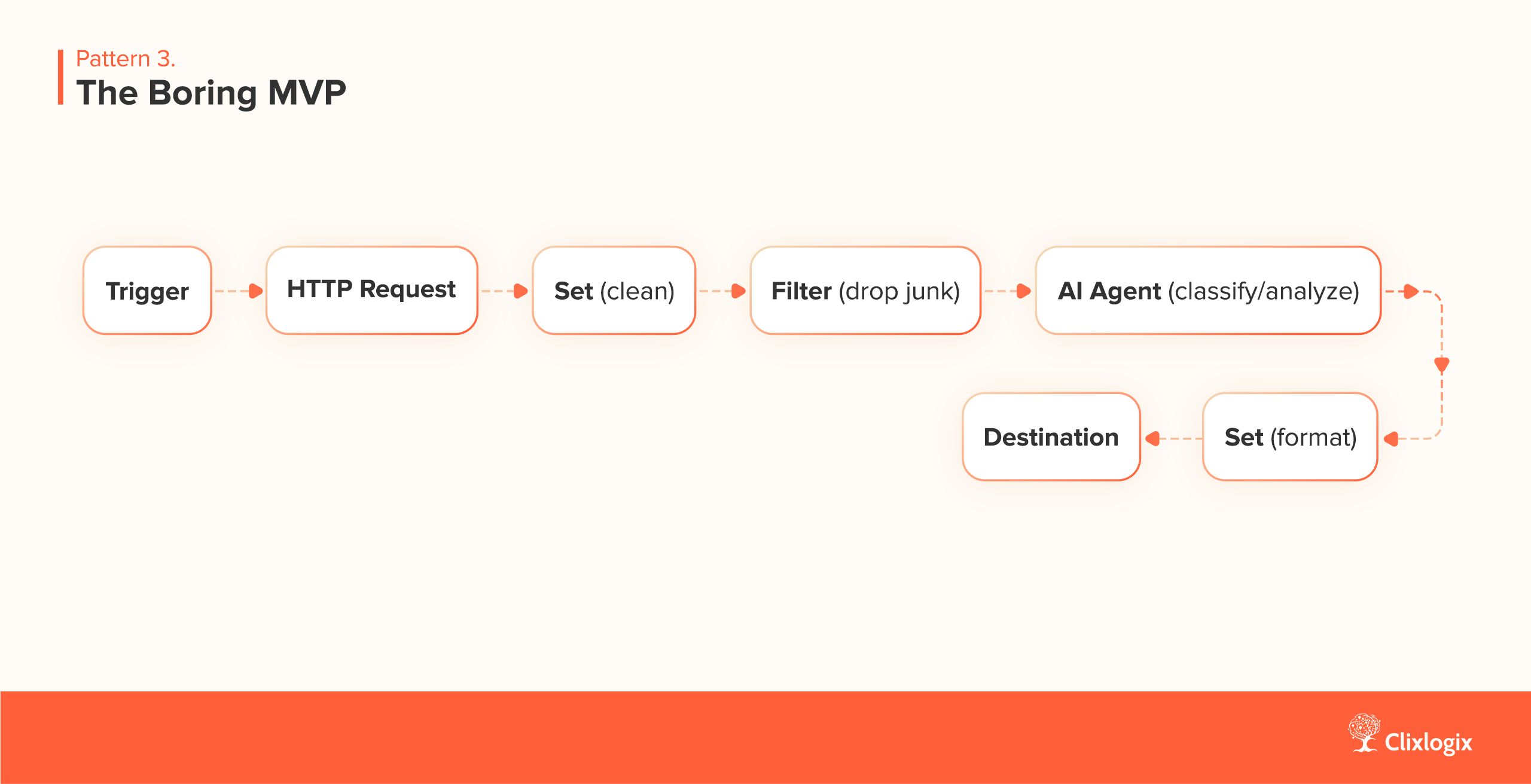

Early success tempts sophistication: branches for every scenario, loops for clever reuse, conditional webs that look smart but hide fragility. Complexity before stability makes failures cheaper to introduce and more expensive to diagnose.

Build the dullest version you can defend. Rely on the six nodes that carry most of the load (HTTP Request, Set / Edit Fields, Filter, Merge, IF, and an AI Agent/LLM chain), and wire them in a straight line so you can reason about every hop.

Validate API calls outside n8n first. This minimal-first approach mirrors what we discussed in our previous blog post on OpenAI Agent Builder vs. n8n, where shipping a working agent mattered more than expanding its surface. Copy a cURL command from the docs into Postman, test it with your own parameters and payloads, then transplant the working request into the HTTP Request node. Postman isn’t fashionable, but it turns integration into a controlled exercise: adjust a header, rerun, observe. Iterate until it works, and only then bring the call onto the canvas.
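Postman is what we reach for, but the same discipline also works as a short script if you prefer one. The sketch below is plain JavaScript for Node 18+, which we assume provides a global `fetch`. It sends one request and reports the status plus any top-level fields your flow expects but did not receive. `probe` and `requiredFields` are hypothetical names for illustration. The fetch function is passed in so the check can also run against a stub.

```javascript
// Minimal API probe to run outside n8n before building the HTTP Request node.
// fetchImpl is injected (e.g. globalThis.fetch in Node 18+) so it can be stubbed.
async function probe(fetchImpl, url, init, requiredFields = []) {
  const res = await fetchImpl(url, init);
  let body = null;
  try {
    body = await res.json();
  } catch {
    // Non-JSON body: leave it null so every required field reports as missing.
  }
  const missing = requiredFields.filter(
    (f) => body === null || typeof body !== "object" || !(f in body)
  );
  return { ok: res.ok, status: res.status, missing };
}
```

A call such as `probe(fetch, "https://api.example.com/v1/items", { headers: { Authorization: "Bearer <token>" } }, ["id"])` uses a placeholder URL and field. Once `missing` is empty and `status` matches what the docs promise, the same URL, headers, and body can move into the HTTP Request node.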

When a small shape works, it becomes a surface for refinement. Until then, cleverness adds places to hide defects.

A “too simple” flow is a gift. When it fails, you can see where. When it succeeds, you know why. And later, as the surface area grows, you will miss this clarity.
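The payoff of a straight line is easy to see in code. As an analogy only (n8n already shows this on the canvas), the hypothetical `runStraightLine` below passes one payload through named steps in order and keeps every intermediate result. If a step fails, it reports exactly which hop broke.

```javascript
// Run named steps in sequence, keeping a trace of every hop's output.
// On failure, stop and report the hop name alongside the error message.
function runStraightLine(steps, input) {
  const trace = [];
  let data = input;
  for (const [name, step] of steps) {
    try {
      data = step(data);
      trace.push({ hop: name, output: data });
    } catch (err) {
      return { ok: false, failedAt: name, error: err.message, trace };
    }
  }
  return { ok: true, result: data, trace };
}
```

Each `[name, fn]` pair stands in for one node. Because nothing branches, the trace up to `failedAt` tells you where to look.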

Every automation eventually needs tiny transformations: trimming a string, pulling out a number, collapsing a structure. Non-coders avoid the Code node even for simple shape changes; coders overuse it until the canvas becomes a disguised script.

Describe your input shape and desired output in plain language, and let a small JavaScript snippet handle just the formatting. Keep it stateless and focused on shape, not policy. Early on we used one that compresses a raw email into a compact summary:

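```javascript
// n8n Code node ("Run Once for All Items"): compress each raw email into
// a compact summary. Formatting only; keep routing decisions on the canvas.
const mapPriority = (s) =>
  /urgent|asap|immediately/i.test(s) ? "high" : "normal";

return items.map(({ json }) => ({
  json: {
    sender: json.from || json.sender,
    // Cap lengths so downstream prompts stay small and predictable.
    subject: (json.subject || "").slice(0, 140),
    // Default missing parts to "" so the regex never sees "undefined".
    priority: mapPriority(`${json.subject || ""} ${json.body || ""}`),
    summary: (json.body || "").slice(0, 240),
  },
}));
```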

This is housekeeping, and it leaves the real logic visible on the canvas. By keeping transformation code small and intentional, you preserve n8n’s visual narrative while gaining the precision that makes the next step predictable.

Iteration is expensive when every run hits an API or an AI model, and a day of “just testing” can quietly cost real money. Live testing forces a choice between speed and thrift. It also makes failures nondeterministic, because a remote system might change between attempts.

Pin node outputs as soon as you see real data. Run the flow once to capture an example; click the pin icon to lock it, then edit the pinned JSON to manufacture edge cases. Downstream nodes now run instantly and consistently, even when the upstream node would be slow or variable.
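Manufacturing edge cases by hand gets tedious. One option is to derive variants from a single captured sample in a scratch Node script, then paste the resulting JSON into the node's pinned output while editing it. The sketch below uses a hypothetical `edgeCases` helper. The `subject` and `body` fields assume the email example used throughout this guide, and the extra `case` field is our own label so you can tell the variants apart downstream.

```javascript
// Derive edge-case variants from one real captured item.
// Paste JSON.stringify(edgeCases(sample), null, 2) into the pinned data editor.
function edgeCases(sample) {
  const variants = [];
  // One variant per missing field.
  for (const key of Object.keys(sample)) {
    const { [key]: _dropped, ...rest } = sample;
    variants.push({ case: `missing:${key}`, ...rest });
  }
  // Boundary tests on length and emptiness.
  variants.push({ case: "long-body", ...sample, body: "x".repeat(10000) });
  variants.push({ case: "empty-subject", ...sample, subject: "" });
  // Wrong type where a string is expected.
  variants.push({ case: "numeric-subject", ...sample, subject: 42 });
  return variants;
}
```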

The result feels like a private laboratory. You can stress a branch with malformed inputs, lengthen a body to test a boundary, or drop a field to surface failures, and you discover what breaks without paying per try. Pinning reduces anxiety. It also changes the social dynamic: you can answer “what happens when…” with a demonstration, not a theory.

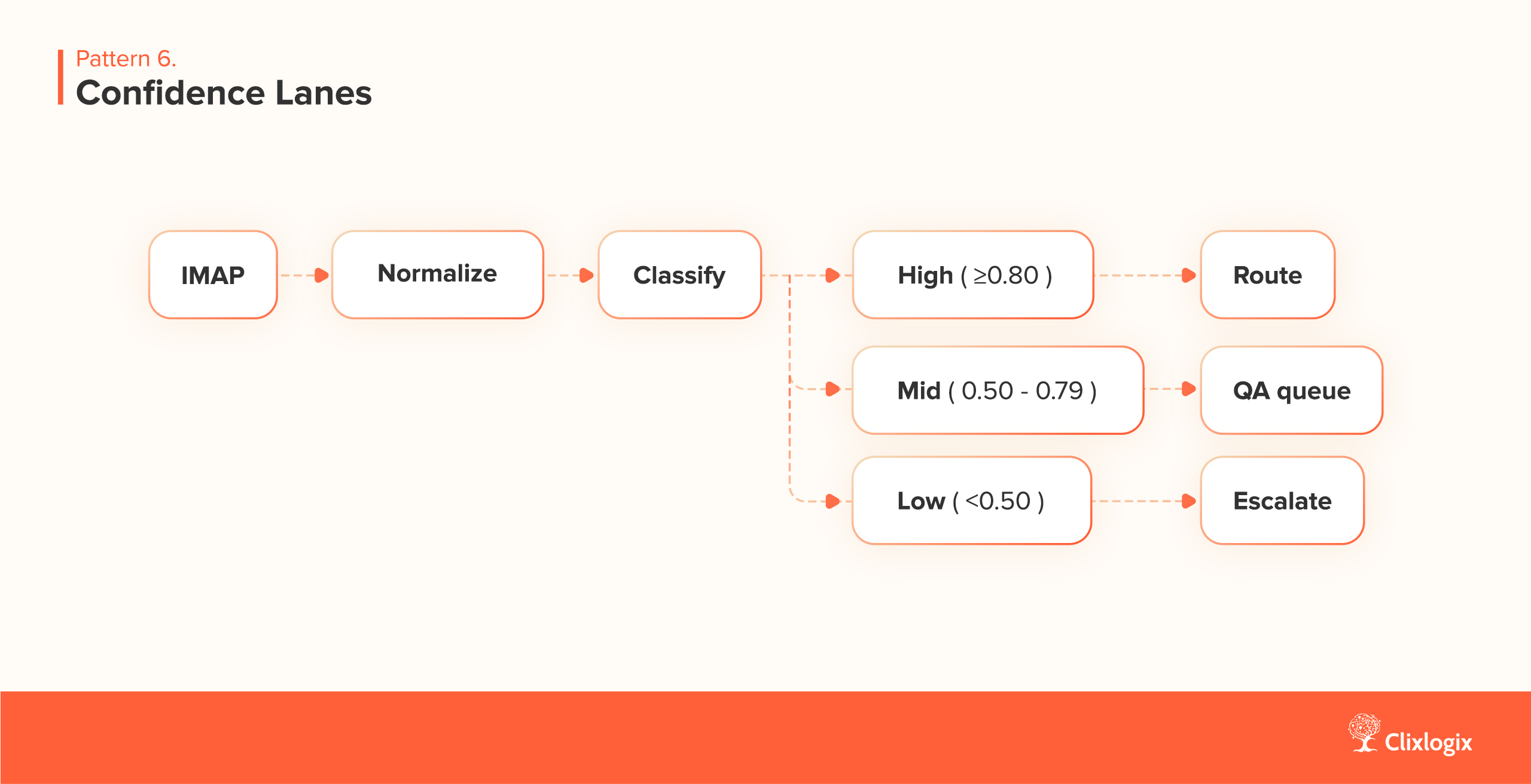

Pattern 6. Confidence Lanes

An AI step in the middle of a flow can do impressive work. It can also be wrong in interesting, confident ways. Treating every model output as equally trustworthy yields silent damage: misrouted messages, wrong tags, noisy alerts.

Split behavior by explicit confidence. When the classifier returns a score, set up three lanes. High confidence continues without ceremony, mid confidence asks a human, and low confidence escalates with context and stops.
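In an n8n flow the lanes would typically be an IF or Switch node that compares the score against two thresholds. Here is the same decision as a small function. The values 0.85 and 0.6 are placeholders, not recommendations, so tune them against your own reviewed samples. `pickLane` is a hypothetical name.

```javascript
// Route a classifier result into one of three lanes by confidence.
// Thresholds are placeholders; tune them on reviewed samples.
const LANES = { high: 0.85, mid: 0.6 };

function pickLane(confidence, lanes = LANES) {
  // Treat a missing or malformed score as the least trusted case.
  if (typeof confidence !== "number" || Number.isNaN(confidence)) return "low";
  if (confidence >= lanes.high) return "high"; // continue automatically
  if (confidence >= lanes.mid) return "mid"; // ask a human
  return "low"; // escalate with context and stop
}
```

Raising `mid` widens the escalation lane, and lowering `high` lets more items through unattended. Both are levers you can move as reviewer feedback accumulates.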

Up close, the mid lane is a pragmatic compromise: it slows the system down and raises trust. Reviewers see the borderline cases, approve or correct them, and improve the next revision of the prompt or schema. Over time the mid lane shrinks and the high lane grows.

Throughput dips where humans are involved, but the workflow earns credibility. Stakeholders trust systems that hesitate when uncertain more than ones that charge ahead.

Networks fail, payloads drift, timeouts happen. The question is not whether failures occur but how they surface. If failure paths are ad hoc, each incident becomes a bespoke rescue.

Design failure as a first-class path. Build a small sub-workflow that receives error context and does three things consistently:

The inputs should include the workflow name, execution ID, failing node, error message, timestamps, and, if you used an AI step, token usage.
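If the sub-workflow starts from n8n's Error Trigger node, a Code node can flatten its output into one consistent record. The field paths below (`workflow.name`, `execution.id`, `execution.lastNodeExecuted`, `execution.error.message`) reflect our understanding of the Error Trigger payload, so check them against a real execution in your instance. `buildErrorContext` is a hypothetical name, and `tokenUsage` is a value you would have to pass along yourself.

```javascript
// Flatten an error payload into the record the error sub-workflow logs.
// Field paths assume n8n's Error Trigger output; verify on your instance.
function buildErrorContext(payload, tokenUsage = null, now = new Date()) {
  const exec = payload.execution ?? {};
  return {
    workflow: payload.workflow?.name ?? "unknown",
    executionId: exec.id ?? null,
    node: exec.lastNodeExecuted ?? null,
    message: exec.error?.message ?? "no message",
    reportedAt: now.toISOString(),
    // Only meaningful when an AI step ran; null otherwise.
    tokenUsage,
  };
}
```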

A minimal retry loop is enough to surface intent:

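```javascript
// Exponential backoff: wait base, 2*base, 4*base ms... between attempts.
async function withRetry(fn, tries = 3, base = 500) {
  let last;
  for (let i = 0; i < tries; i++) {
    try {
      return await fn();
    } catch (e) {
      last = e;
      // Skip the sleep after the final attempt; just rethrow below.
      if (i < tries - 1) await new Promise((r) => setTimeout(r, base * 2 ** i));
    }
  }
  throw last;
}
```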

You’ll also want to log successes. A daily note such as “Your automation processed 47 leads today” does more than flatter. It proves the system works when nothing is broken, which is exactly when most systems are ignored. With failure as an intentional route, incidents become data instead of drama, and teams can talk about mean time to recovery instead of best-guess fixes.

The scariest failures aren’t always logical. They’re financial. An overnight loop with a verbose model can produce a bill no one expected. Invisible cost erodes trust and, if repeated, ends projects.

Wrap the AI step with a small cost calculation. Record the model name and the number of tokens it consumed, multiply by your known rates, add the result to the item’s JSON, and roll up a daily estimate. Then send a small digest that says what you processed, what failed, and what you spent.
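Here is a sketch of that wrapper under loud assumptions. The model names and per-million-token prices in `RATES` are placeholders, not anyone's current pricing. The token counts are whatever your AI node reports. `estimateCost` and `dailyDigest` are hypothetical helper names.

```javascript
// Placeholder prices in USD per million tokens; replace with your provider's rates.
const RATES = {
  "small-model": { input: 0.15, output: 0.6 },
  "large-model": { input: 2.5, output: 10 },
};

// Attach an estimated cost to one item that records model and token usage.
function estimateCost(item, rates = RATES) {
  const rate = rates[item.model];
  if (!rate) return { ...item, costUsd: null }; // unknown model: flag it, don't guess
  const costUsd =
    (item.inputTokens * rate.input + item.outputTokens * rate.output) / 1e6;
  return { ...item, costUsd };
}

// Roll a day's items into the digest: processed, failed, spent.
function dailyDigest(items) {
  const processed = items.filter((i) => !i.error).length;
  const failed = items.length - processed;
  const spent = items.reduce((sum, i) => sum + (i.costUsd ?? 0), 0);
  return `Processed ${processed}, failed ${failed}, spent ~$${spent.toFixed(4)}`;
}
```

Returning `null` for an unknown model is deliberate. A digest that quietly prices an unlisted model at zero would reintroduce the invisible cost this pattern is meant to remove.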

The psychology matters. People relax when costs are seen and bounded. They also become willing to trade prompt verbosity for a smaller model where it doesn’t hurt. Guardrails encourage good taste. A visible budget nudges design toward restraint: compact prompts, lighter models for non-critical steps, heavy jobs scheduled off peak. The system feels guided rather than hopeful.

As victories accumulate, duplication creeps in. You see the same “clean this payload” code in three flows, the same Slack formatter in four. Copy-pasted logic ages at different speeds. Fixes land in one place and not another.

Extract stable behavior into small sub-workflows, LEGO blocks you can trust. Give each one a contract: a clear input shape, a clear output shape, and an explicit failure mode. Common blocks emerge quickly: Data Cleaning, Error Handler, AI Classifier (with a consistent `{ label, confidence, rationale }` shape), and Output Formatter. Call them with Execute Workflow and wait for the return value so the contract stays honest.
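A contract is only honest if something checks it. Below is a minimal sketch of a check you might run at the end of the AI Classifier sub-workflow. It assumes `confidence` is a number between 0 and 1; the guide does not fix a scale. `checkClassifierContract` is a hypothetical name.

```javascript
// Validate the AI Classifier's output contract: { label, confidence, rationale }.
// Returns a list of violations; an empty list means the contract holds.
function checkClassifierContract(out) {
  if (out === null || typeof out !== "object") return ["output is not an object"];
  const problems = [];
  if (typeof out.label !== "string" || out.label.trim() === "") {
    problems.push("label must be a non-empty string");
  }
  // The negated range check also rejects NaN.
  if (typeof out.confidence !== "number" || !(out.confidence >= 0 && out.confidence <= 1)) {
    problems.push("confidence must be a number in [0, 1]");
  }
  if (typeof out.rationale !== "string") {
    problems.push("rationale must be a string");
  }
  return problems;
}
```

A non-empty list would route to the error path rather than returning a half-formed result to the calling workflow.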

Reuse accelerates iteration and reduces noise. It also invites a shared language. Teams learn to say “send it through the cleaner” instead of “trim those fields again,” and the phrase refers to a tested component, not a hope.

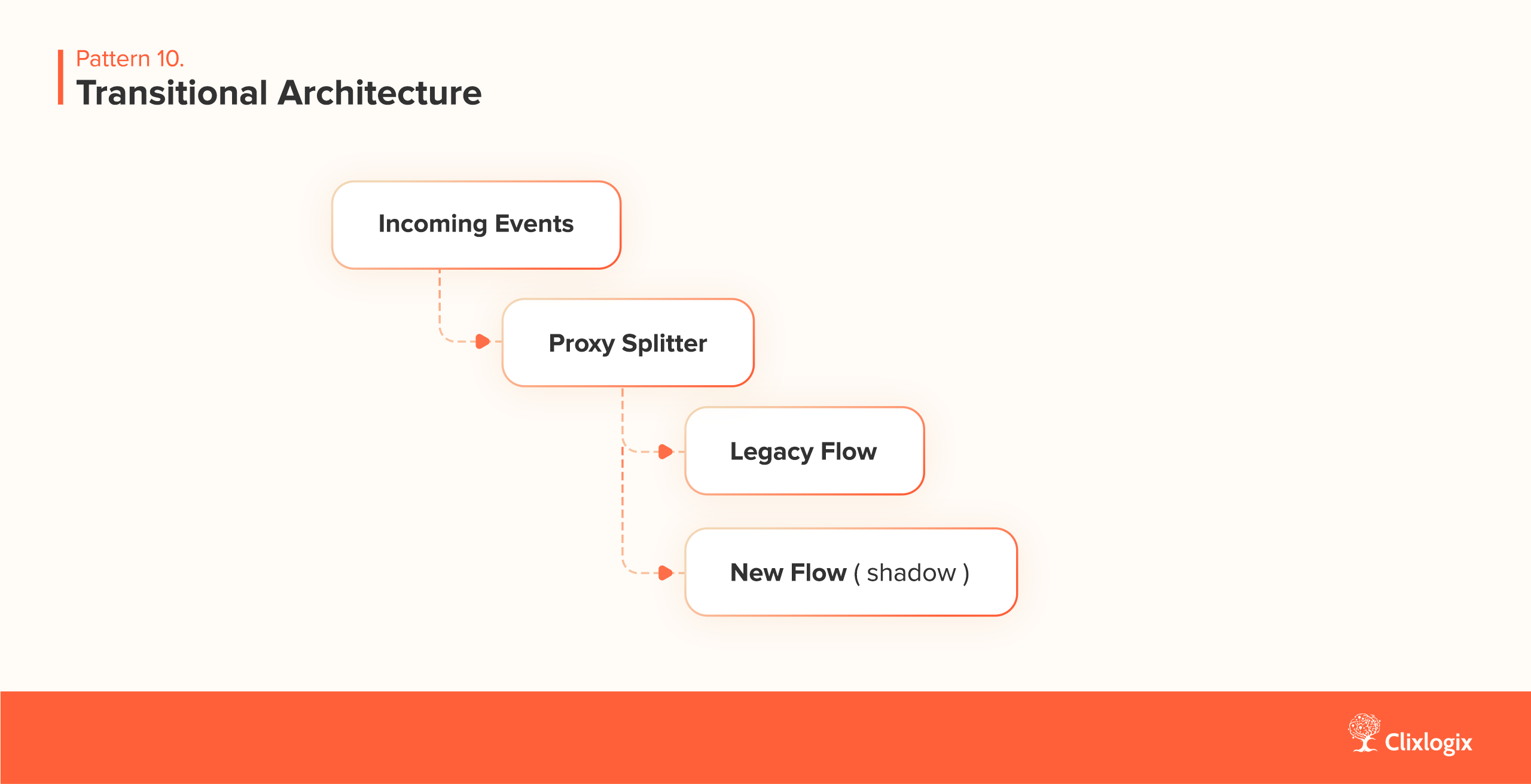

Nothing is as permanent as a temporary flow that works. Eventually you must change it. Big rewrites carry big risks. A cutover that seems neat in a meeting often stumbles under traffic.

Apply a strangler-style migration. Put a small proxy in front of the legacy flow and let it fork traffic, sending most events to the old path and some to the new. Compare outputs for a while. When parity is good and incidents are low, bias the split toward the new path. Retire the old one when its usage falls and confidence in the new one rises.
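It helps if the fork is deterministic, so the same message always takes the same path and comparisons stay fair. One common technique, sketched here as our assumption rather than an n8n feature, hashes a stable key such as the message ID into a bucket from 0 to 99. Buckets below the rollout percentage go to the new flow. The FNV-1a hash and the `routeFor` name are illustrative choices.

```javascript
// FNV-1a 32-bit hash: cheap and stable across runs, enough for bucketing.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h >>> 0;
}

// Decide which path a message takes. The same key always gets the same path at
// a given percent, and raising the percent only moves keys from legacy to new.
function routeFor(key, percentNew) {
  const bucket = fnv1a(String(key)) % 100;
  return bucket < percentNew ? "new" : "legacy";
}
```

Moving `percentNew` from 10 to 50 to 100 gives the gradual bias the pattern describes, without any message flapping between paths.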

The proxy is scaffolding; remove it when the structure stands. Transitional elements slow the story in exchange for safety. They also produce clean measurements of parity and lag, which give you numbers for decision-making instead of hunches.

As more builders touch the canvas, governance by edict breaks down. Central approval doesn’t scale, and chaos doesn’t help. Without a way to talk about changes, each team optimizes locally and the system drifts.

Replace bureaucracy with dialogue. Ask for a short note before anyone alters a shared flow, covering intent, context, contract changes, and rollout plan. Meet briefly to show a failure and its fix. Add lightweight “fitness functions” that encode guardrails. Does the classifier always return `label` and `confidence`? Is daily AI spend under a set threshold? Are payloads masked for PII in logs?
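Fitness functions can be tiny. The sketch below implements the three questions as plain functions you could run on a schedule against recent log entries. The log-entry shape, the email-only PII pattern, and the budget you pass in are all assumptions for illustration. A real PII check would need more than one pattern.

```javascript
// Each fitness function returns { name, pass, detail } for a batch of log entries.
// Assumed entry shape: { classifier: { label, confidence }, costUsd, snapshot }.
const EMAIL = /[^\s@]+@[^\s@]+\.[^\s@]+/;

function classifierShape(entries) {
  const bad = entries.filter(
    (e) => typeof e.classifier?.label !== "string" || typeof e.classifier?.confidence !== "number"
  ).length;
  return { name: "classifier returns label and confidence", pass: bad === 0, detail: `${bad} bad` };
}

function spendUnder(entries, dailyLimitUsd) {
  const spent = entries.reduce((sum, e) => sum + (e.costUsd ?? 0), 0);
  return { name: "daily AI spend under limit", pass: spent <= dailyLimitUsd, detail: `$${spent.toFixed(2)}` };
}

// Only catches raw email addresses; other kinds of PII need their own patterns.
function piiMasked(entries) {
  const leaks = entries.filter((e) => EMAIL.test(JSON.stringify(e.snapshot ?? ""))).length;
  return { name: "no raw emails in logs", pass: leaks === 0, detail: `${leaks} leaks` };
}
```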

The organization gains coherence without stifling autonomy. Architecture becomes something people practice together, not something a diagram asserts.

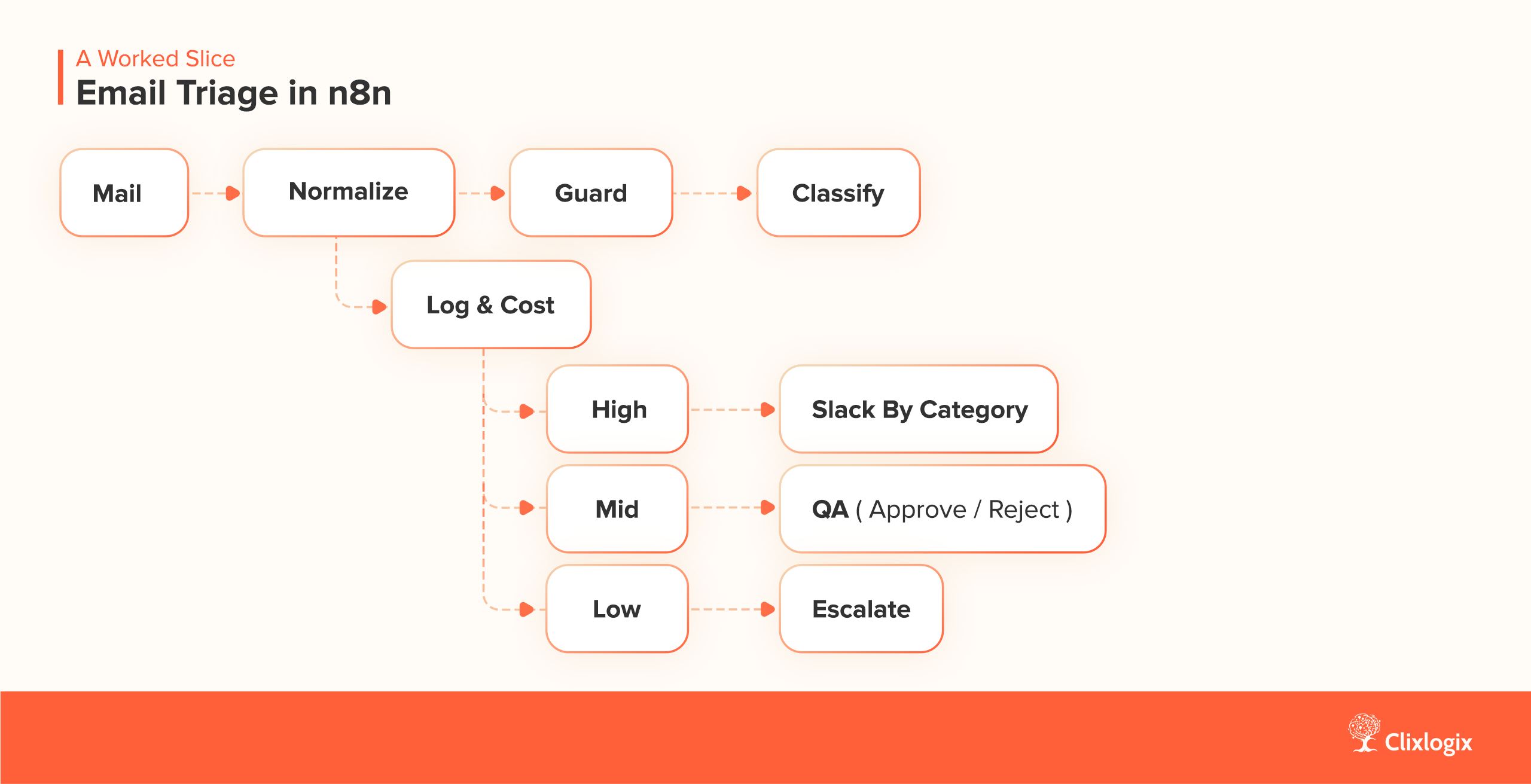

To make the patterns concrete, consider Sarah’s triage problem. The goal is for morning triage to drop from forty-five minutes to fifteen, with urgent emails reaching a human within thirty. The flow begins with an IMAP trigger. A Set node normalizes the payload into `{ sender, subject, body, receivedAt }`.

A guard throws early if any of those fields are missing. An AI step classifies the message into a category and returns an urgency with a confidence score. The confidence lanes then decide what happens next. High confidence routes directly to the right Slack channel, mid confidence posts into a QA channel with approve/reject options, and low confidence raises an escalation ticket with the message attached.
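The guard is the smallest piece of the flow and the one that saves the most confusion. Below is a plain JavaScript sketch that could sit in a Code node right after the Set node. `assertTriageShape` is a hypothetical name, and treating empty strings as missing is our choice.

```javascript
// Fail fast if the normalized payload lacks any field the flow relies on.
const REQUIRED = ["sender", "subject", "body", "receivedAt"];

function assertTriageShape(json) {
  const missing = REQUIRED.filter(
    (k) => json[k] === undefined || json[k] === null || json[k] === ""
  );
  if (missing.length > 0) {
    // Throwing fails the node; an error workflow, if configured, takes over.
    throw new Error(`Triage payload missing: ${missing.join(", ")}`);
  }
  return json;
}
```

Inside a Code node it would be applied per item, for example `return items.map(({ json }) => ({ json: assertTriageShape(json) }));`.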

An Execute Workflow call writes a log entry that includes a sanitized snapshot of the inputs and the estimated AI cost.
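What “sanitized” means is a policy decision. The sketch below takes one narrow reading as an assumption: hide the local part of any email address and keep only a short prefix of the body. `sanitizeSnapshot` and `maskEmail` are hypothetical names. The `costUsd` field assumes an earlier step attached the cost estimate to the item.

```javascript
// Replace the local part of every email address with "***".
function maskEmail(s) {
  return String(s).replace(/([^\s@<]+)@([^\s@>]+)/g, (_m, _local, domain) => `***@${domain}`);
}

// Build a log-safe snapshot. Masking happens before truncation so a cut
// address can't leak a partial local part.
function sanitizeSnapshot(json, maxBody = 80) {
  return {
    sender: maskEmail(json.sender ?? ""),
    subject: maskEmail(json.subject ?? ""),
    bodyPreview: maskEmail(json.body ?? "").slice(0, maxBody),
    receivedAt: json.receivedAt ?? null,
    costUsd: json.costUsd ?? null,
  };
}
```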

The first day mirrors ten percent of traffic to the new path and compares lag and routing accuracy. A few edits follow: the prompt becomes smaller, and the mid-lane threshold nudges up. By the end of the week the split shifts to fifty percent, then to all traffic. Sarah’s morning drops below fifteen minutes. Urgent mail seldom waits.
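Comparing the two paths takes little more than paired results. The sketch below assumes each mirrored message produces a record with both paths' routing decisions and latencies in milliseconds. The record shape and `compareRuns` are hypothetical. Agreement with the old path is only a proxy for routing accuracy, so the disagreements are the cases to review by hand.

```javascript
// Median of a list of numbers; null for an empty list.
function median(nums) {
  if (nums.length === 0) return null;
  const s = [...nums].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Summarize a mirrored rollout. Assumed record shape:
// { id, legacy: { route, ms }, next: { route, ms } }
function compareRuns(records) {
  const disagreements = records
    .filter((r) => r.legacy.route !== r.next.route)
    .map((r) => r.id);
  return {
    compared: records.length,
    parity: records.length ? 1 - disagreements.length / records.length : null,
    medianMsLegacy: median(records.map((r) => r.legacy.ms)),
    medianMsNew: median(records.map((r) => r.next.ms)),
    disagreements, // worth reading one by one
  };
}
```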

Nothing here is novel. It’s a string of ordinary beats executed deliberately: normalized inputs, explicit contracts, confidence lanes, cheap tests, a daily note.

An AI score is interesting. An adoption number is decisive. When Sarah’s morning triage falls to fifteen minutes and urgent mail surfaces within thirty, people stop asking which model did it. That is the right order of concern. Measure time saved per person per day. Measure the percentage of items that fall into the mid lane and whether it shrinks. Watch false escalations, not just errors. Count dollars per processed item and share the small total.
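Most of these numbers fall out of the log entries the flow already writes. The sketch below assumes each entry records its lane, whether a reviewer later marked an escalation as unnecessary, and its estimated cost. Those field names and `healthReport` are hypothetical. Time saved per person per day will not appear in the logs; it has to be measured with the people doing the work.

```javascript
// Compute health metrics from logged items. Assumed entry shape:
// { lane: "high" | "mid" | "low", falseEscalation?: boolean, costUsd?: number }
function healthReport(entries) {
  const n = entries.length;
  if (n === 0) return { items: 0 };
  const count = (pred) => entries.filter(pred).length;
  const escalated = count((e) => e.lane === "low");
  const spent = entries.reduce((sum, e) => sum + (e.costUsd ?? 0), 0);
  return {
    items: n,
    midLaneShare: count((e) => e.lane === "mid") / n, // should shrink over time
    // Share of escalations a reviewer judged unnecessary.
    falseEscalationRate: escalated
      ? count((e) => e.lane === "low" && e.falseEscalation) / escalated
      : 0,
    costPerItemUsd: spent / n,
  };
}
```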

If these numbers move the right way, the system is healthy.

If they don’t, change the system.

All the pieces in this guide grow from the same root. Start with reality, not the tool. Shape a small thing you can explain. Test cheaply. Separate certainty from guesswork. Treat errors and costs as design, not accidents. Evolve in place rather than swapping engines mid-flight.

In other words, think like a workflow detective with technical skills, not an AI engineer in search of a canvas.

n8n gives us generous primitives. The habits you bring decide whether they accumulate into a dependable system or a fragile demo. If you adopt only one practice, pin the data and write the contracts. It changes everything that follows.

And if you adopt them all, you’ll notice something calmer: your flows do more than run; they age gracefully. They absorb change. They tell you when they’re uncertain. They cost what you expect. People begin to rely on them without thinking about them.

That’s the best compliment an automation can receive.

As CEO of Clixlogix, Pushker helps companies turn messy operations into scalable systems with mobile apps, Zoho, and AI agents. He writes about growth, automation, and the playbooks that actually work.