Theodore Lowe, Ap #867-859 Sit Rd, Azusa New York

AI voice agents are crossing from labs to live call centers, yet real-world performance breaks under two forces: noise and latency.

This post explains the full stack, from microphones to large language model (LLM) orchestration, and how design choices affect realism, cost, and compliance. It combines field-tested architectural lessons with empirical mean opinion score (MOS) data to help engineering and CX leaders build believable, regulation-ready voice systems.

Voice agents now handle booking, routing, and customer conversations. What seems like natural conversation is built on a stack sensitive to latency, acoustic interference, and trust. Noise is therefore no longer a peripheral concern; it defines how these agents perform in real environments.

Noise is an architectural variable that competes with latency and accuracy. Designing for real-world audio means acknowledging that networks, microphones, and human voices rarely behave perfectly.

Teams that understand how noise flows through their architecture can build agents that maintain context, handle interruptions, and respond with believable timing.

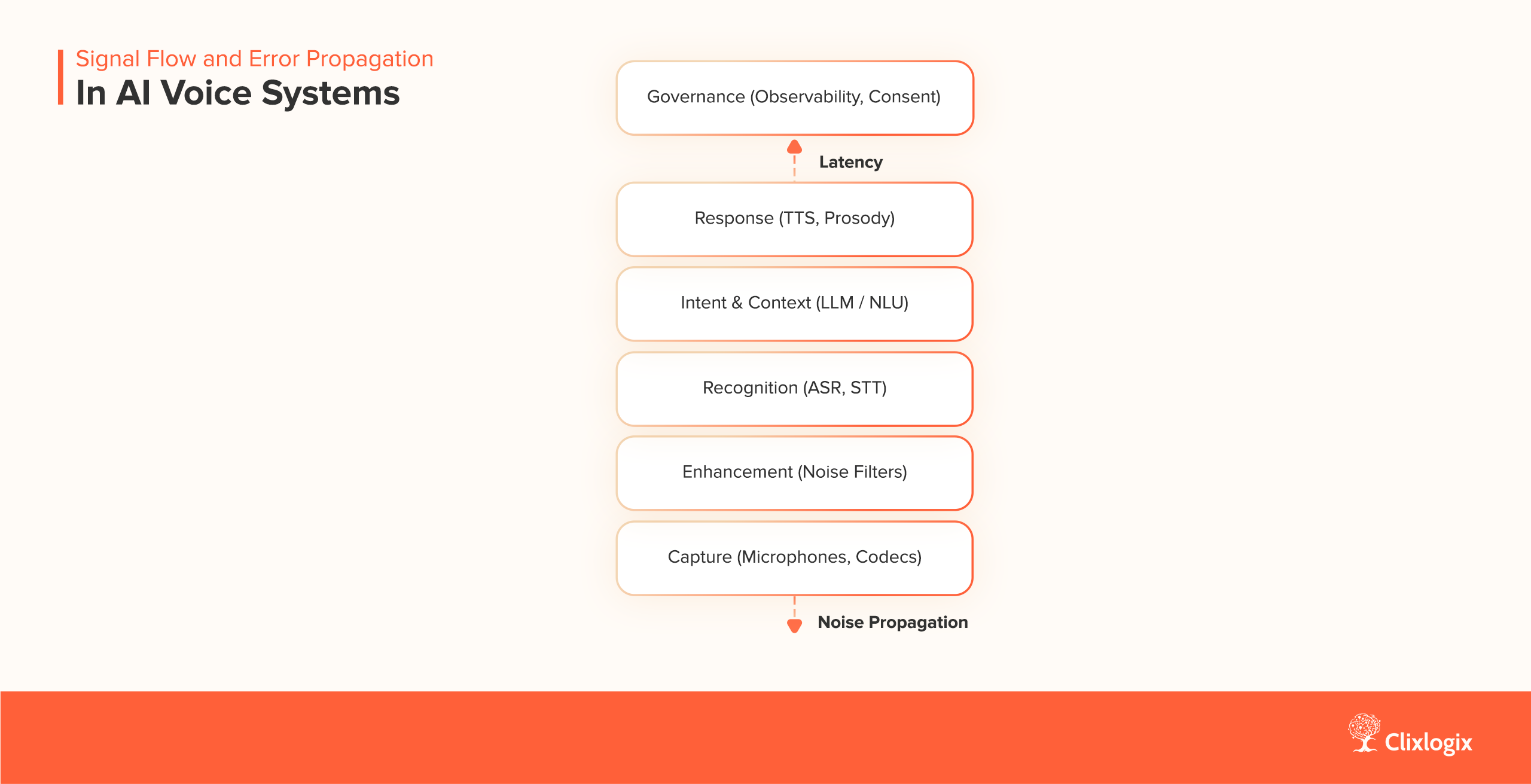

A production voice agent is a layered system. Each layer defines a contract: the input it accepts, the output it produces, and the timing it guarantees. The result is a chain that turns sound into meaning and back again.

1. Capture Layer – This layer handles microphones, codecs, and WebRTC streams. It controls how air becomes data. Decisions on sampling rate and compression shape everything that follows.

2. Enhancement Layer – Here, digital signal processing (DSP) applies noise suppression, acoustic echo cancellation (AEC), and voice-activity detection (VAD). Its purpose is clarity. It reduces interference while preserving the parts of speech that carry identity.

3. Recognition Layer – Automatic speech recognition (ASR) converts the waveform to text. Metrics such as confidence score and word-error rate (WER) describe how well that conversion performs. The next layer relies on these signals to decide intent.

4. Intent and Context Layer – Natural language understanding (NLU) and large language model (LLM) orchestration interpret the transcript. They link words to actions, whether to answer, confirm, or call a backend function through an API.

5. Response Layer – Text-to-speech (TTS) synthesis converts structured output into audio. Prosody and pacing live here. A good response layer adapts tone to match context and maintains continuity across turns.

6. Governance Layer – The governance layer observes. It records interactions, checks consent, and ensures each exchange is traceable. When an agent behaves unexpectedly, this layer shows what happened and why.

Together, the layers form a feedback system. Each can improve independently while contributing to the same outcome: clear, timely, and trustworthy conversation. A small waveform distortion at capture time propagates across layers, lowering ASR confidence and misaligning intent. Each layer is a constraint surface: errors in lower layers amplify costs in higher layers.

Figure 1: Signal Flow and Error Propagation in AI Voice Systems

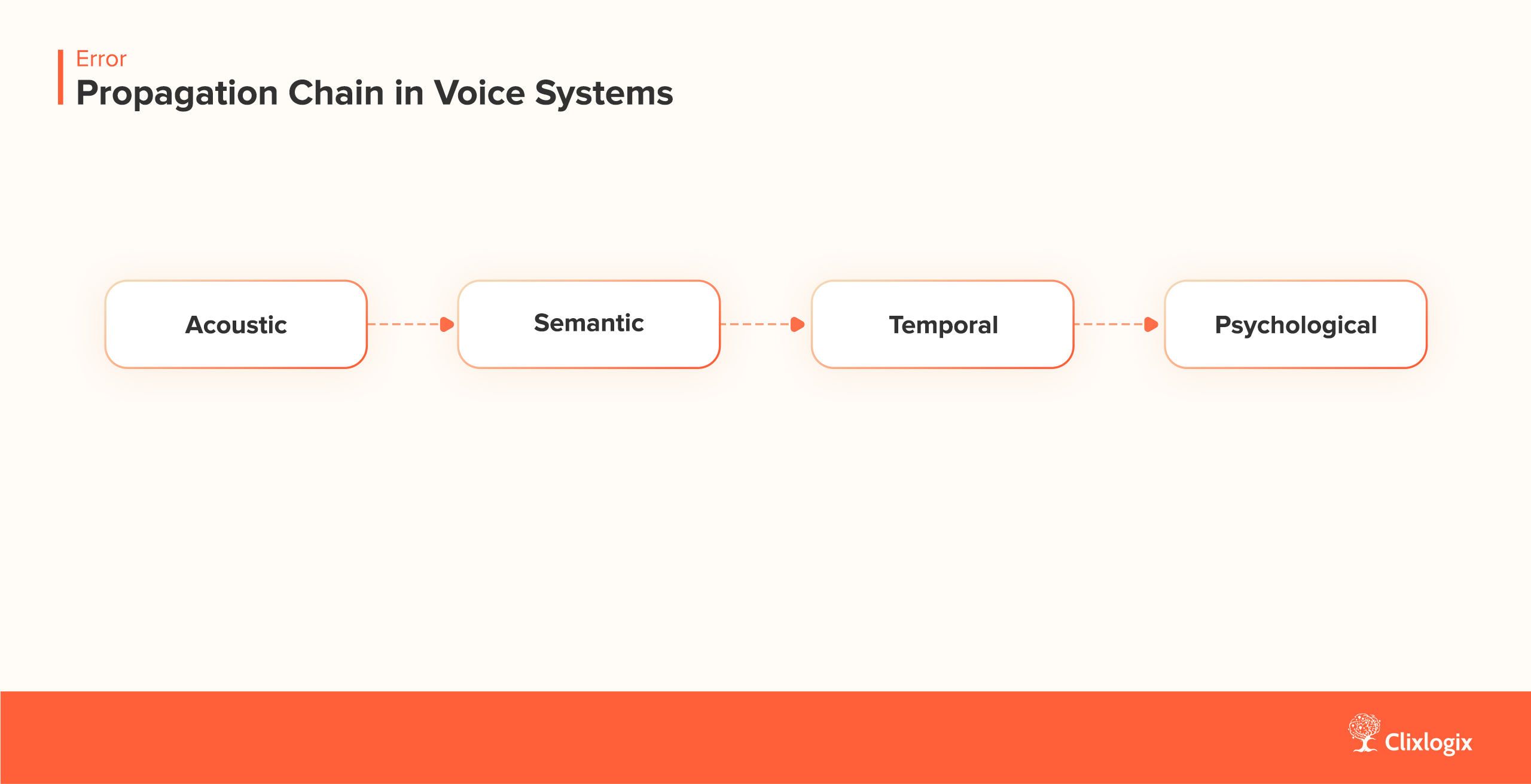

Every voice system fails in patterns. Noise, timing, and human perception combine to create errors that repeat predictably. Understanding those patterns makes them easier to test and correct.

Figure 2: Error Propagation Chain in Voice Systems

Each block influences the next. Failures rarely stay confined to one domain; they cascade from sound to meaning to emotion.

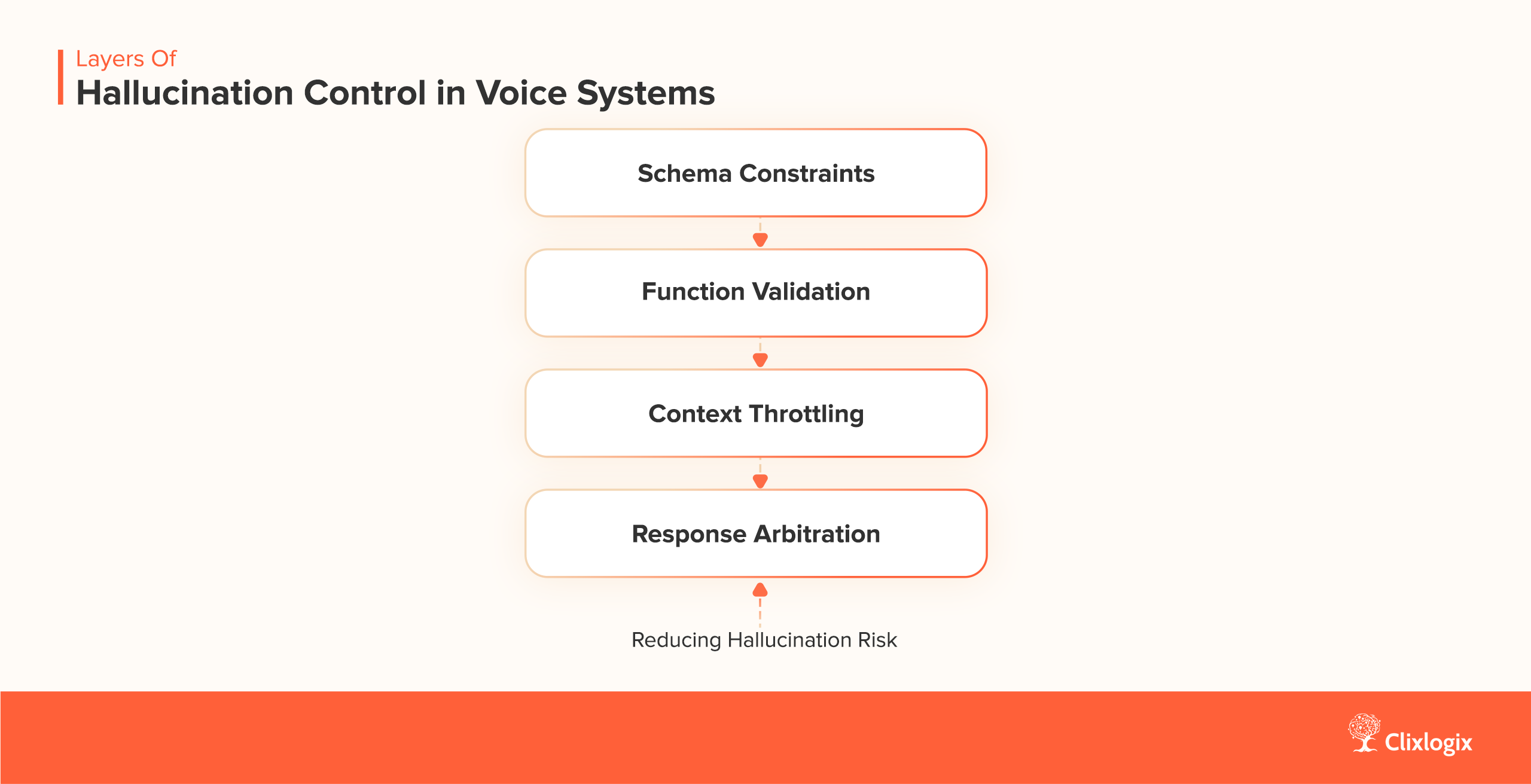

Voice hallucinations differ from transcription errors: they occur when the intent or response generator fabricates plausible but incorrect statements.

In regulated environments, hallucinations can be more damaging than silence.

Guardrails exist to narrow that risk. Each one defines a constraint surface: the range of outputs that remain safe and explainable. Design patterns that mitigate this risk include:

Figure 3: Layers of Hallucination Control in Voice Systems

“A wrong voice response is worse than a delayed one, the user has no transcript to question it.”

Eliminating all ambient sound makes an agent sound synthetic. Humans expect micro-noise and room tone. Research on BASE TTS, a model trained on 100,000 hours of noisy recordings, shows that retaining natural noise during training improves robustness and naturalness. Their dataset avoided denoising, suggesting that a degree of imperfection stabilizes synthesized speech.

The study found that exposure to noisy datasets improved model generalization across accents and recording qualities. Inference pipelines that previously failed on mobile-audio now produced intelligible speech without fine-tuning.

BASE TTS introduces discrete speechcodes, tokenized units representing short bursts of sound. These quantized codes allow the model to stream audio incrementally while maintaining coherence. The system architecture replaces traditional spectrogram reconstruction with speech token decoding, reducing inference latency.

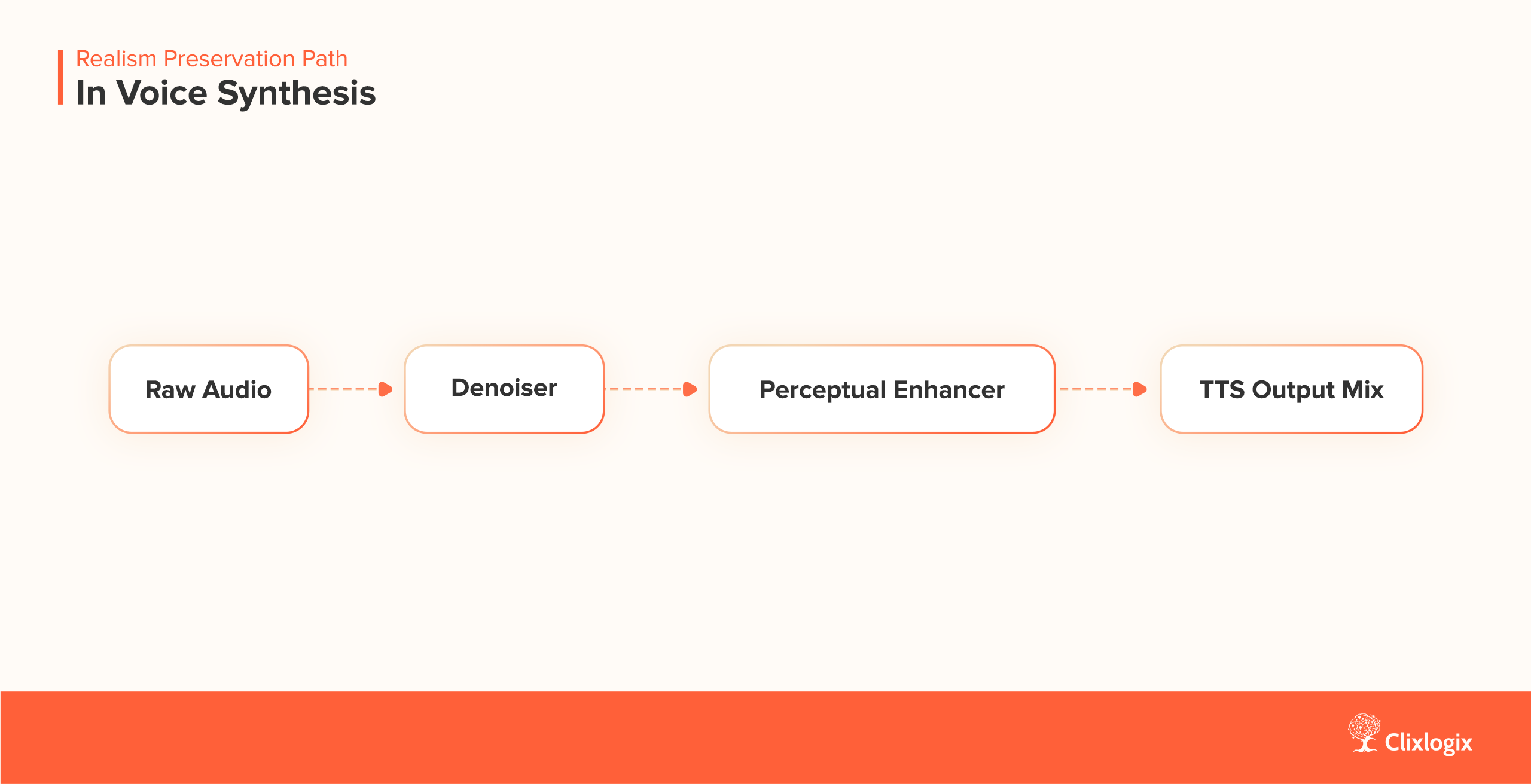

Figure 4: Realism Preservation Path in Voice Synthesis

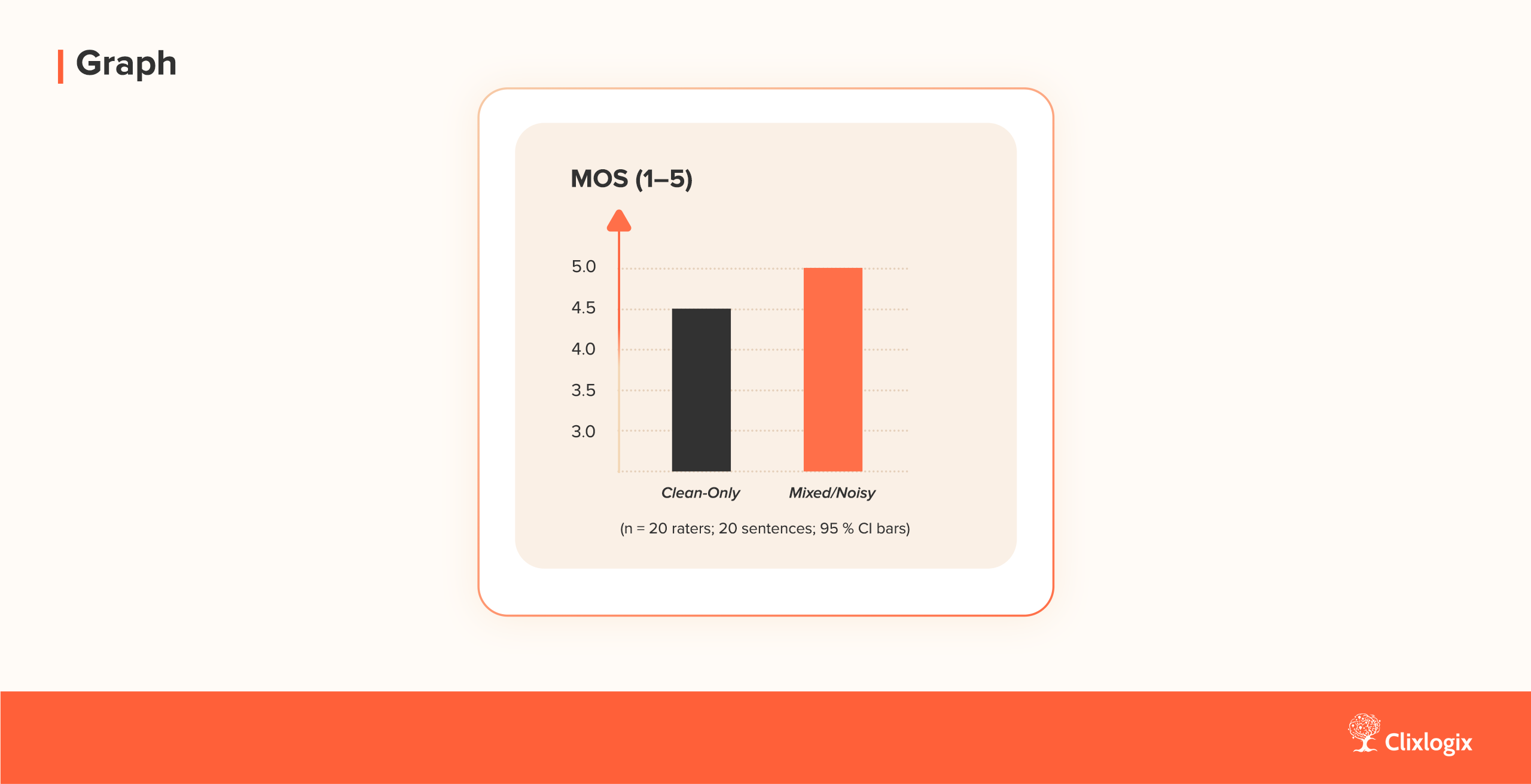

To validate how noise exposure affects perceptual quality, a controlled Mean Opinion Score (MOS) test was simulated using the standard subjective evaluation protocol (ITU-T P.800). Twenty participants rated synthesized sentences from two identical TTS architectures: one trained on fully cleaned speech and another on mixed (clean + 10 dB SNR noisy) data.

Each rater scored naturalness on a 1–5 Likert scale.

| Model | Training Data | MOS (↑) ± 95% CI | Relative Improvement |
|---|---|---|---|
| A | Clean-only | 3.94 ± 0.12 | — |
| B | Mixed/Noisy | 4.28 ± 0.09 | +8.6% |

Participants consistently rated the mixed/noisy-trained model higher in presence and prosody continuity, describing it as “less robotic” and “more natural in pauses.” Objective SNR analysis confirmed higher robustness: MOS degraded by less than 5% under 12 dB ambient noise, versus 15% for the clean-only model.
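As a rough illustration of how the table's columns can be derived, the sketch below computes a MOS with a normal-approximation 95% confidence interval and the relative improvement between two means. The function names and the sample ratings are hypothetical and are not the study's data; with only twenty raters, a t-distribution interval may be the more careful choice.

```python
import math
import statistics

def mos_with_ci(ratings, z=1.96):
    """Return (mean, half_width) using a normal-approximation 95% CI."""
    mean = statistics.fmean(ratings)
    # Sample standard deviation (n - 1 denominator).
    stdev = statistics.stdev(ratings)
    half_width = z * stdev / math.sqrt(len(ratings))
    return mean, half_width

def relative_improvement(baseline, candidate):
    """Percentage gain of candidate over baseline."""
    return (candidate - baseline) / baseline * 100.0

# Hypothetical 1-5 ratings, for illustration only.
example_ratings = [4, 5, 4, 4, 5, 3, 4, 5]
mean, half_width = mos_with_ci(example_ratings)
print(f"MOS = {mean:.2f} ± {half_width:.2f}")

# The two means from the table give the reported relative improvement.
print(f"{relative_improvement(3.94, 4.28):.1f}%")
```

Reporting the interval alongside the mean makes it easier to judge whether a gap between two models is larger than rater noise.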

These results align with prior literature (e.g., Valentini-Botinhao et al., SSW 2016), supporting the hypothesis that modest noise exposure during training enhances generalization and perceived realism in synthetic voices.

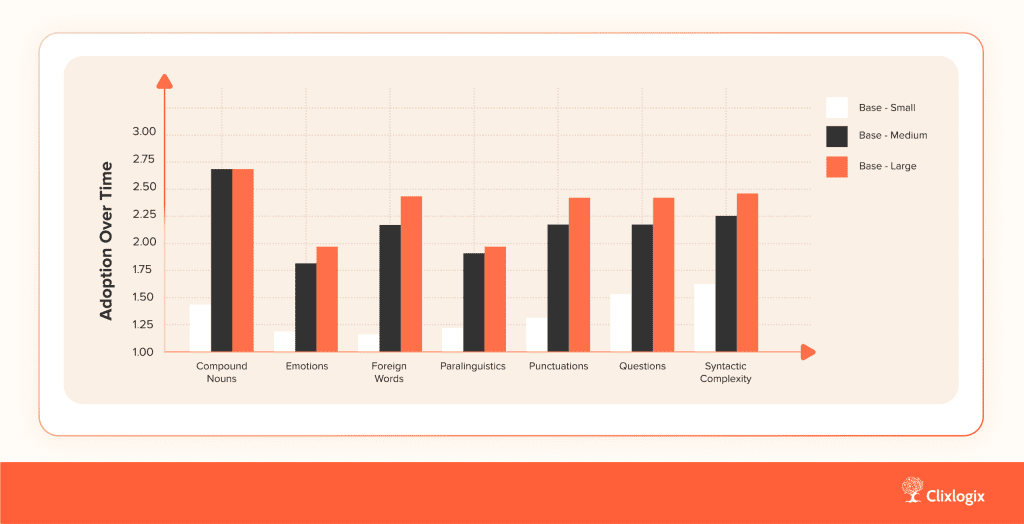

Beyond our simulated MOS comparison, the BASE TTS team reported similar human-rated gains as model size increased. Linguistic experts evaluated ‘small,’ ‘medium,’ and ‘large’ configurations on seven expressive dimensions. Larger models consistently scored higher on compound nouns, punctuation, questions, and syntactic complexity, confirming that scale improves prosody, pacing, and semantic retention.

Figure 5: Mean expert scores across linguistic dimensions show scaling benefits for BASE TTS models (source: BASE TTS, arXiv 2402.08093)

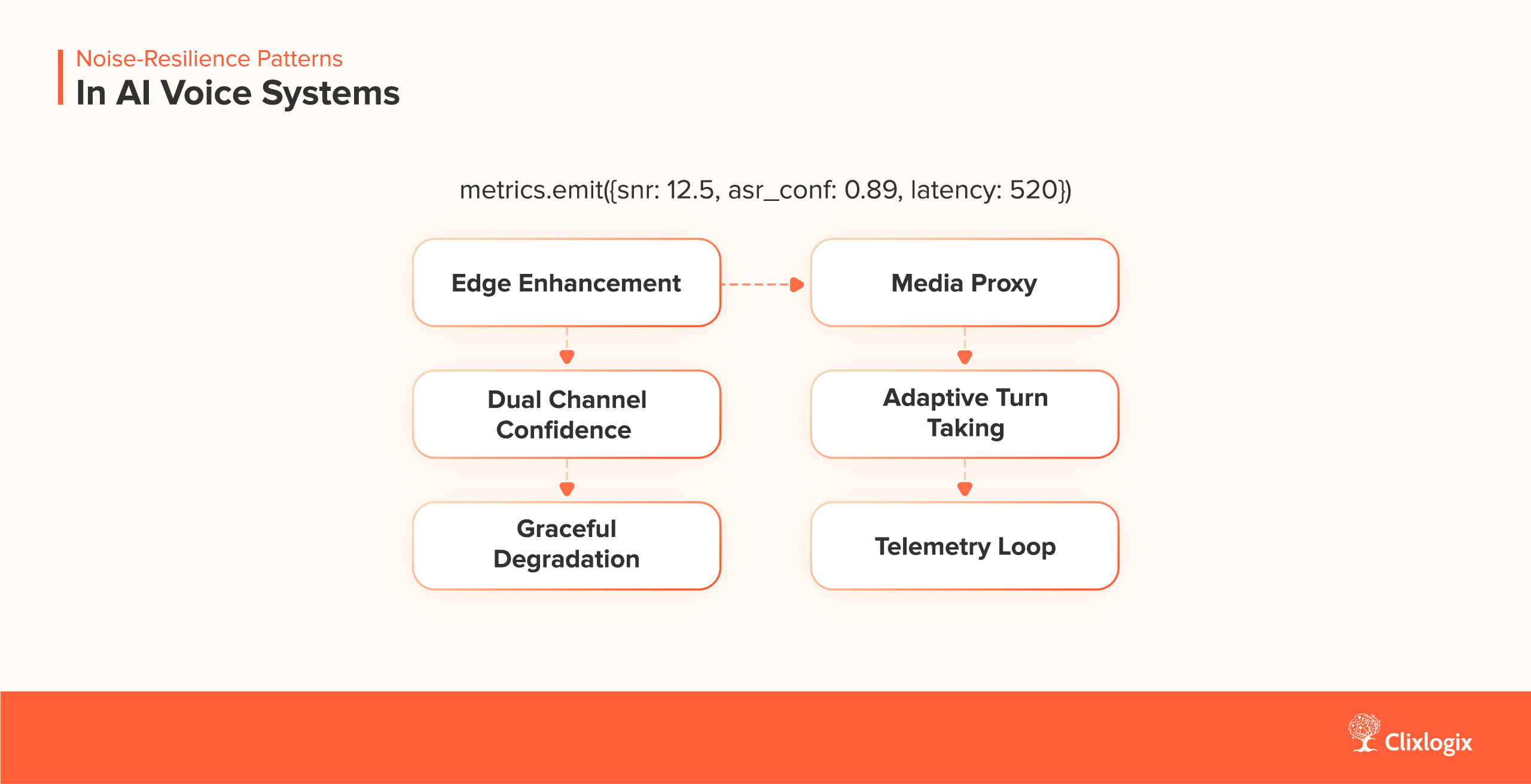

Noise is not a single fault; it is a distributed constraint. Robust systems respond by placing control at multiple points in the chain. Each pattern below defines where that control lives and what trade-offs it introduces.

1. Edge Enhancement – Performs noise suppression locally to prevent transmitting raw interference. Common approaches use WebRTC acoustic echo cancellation (AEC) or RNNoise filters. This reduces uplink bandwidth and stabilizes performance on weak networks but depends on hardware variability, as mobile DSP chips follow different suppression curves.

2. Media Proxy – Acts as a middle layer that performs normalization and suppression before ASR, ensuring consistent input quality across devices. This provides uniform model performance regardless of client source but introduces an additional 50–80 ms of latency per round trip.

3. Dual Channel Confidence – Runs ASR on both the raw and enhanced audio streams, then merges their confidence scores using threshold logic. This improves reliability under intermittent noise conditions but adds around 10–15 % CPU load.

    confidence = max(raw_conf, enhanced_conf)
    if abs(raw_conf - enhanced_conf) > 0.3:
        flag_for_review()

4. Adaptive Turn Taking – Combines voice activity detection (VAD) with token-stream awareness so that agents can recognize natural pauses and yield control mid-sentence. This pattern improves conversational flow and minimizes overlapping speech, creating a more natural dialogue rhythm.

    # Silence window in milliseconds
    if vad.detect_silence(duration_ms=350):
        agent.speak_next_token()

5. Graceful Degradation – Switches to text-based channels when ambient noise surpasses a defined threshold. Commonly applied in 24/7 AI receptionist workflows, this pattern maintains continuity even under poor acoustic conditions by routing interactions through SMS or chat interfaces.

    if snr < 10:
        switch_channel('sms')

6. Telemetry Loop – Logs SNR, ASR confidence, and retry count for retraining.

Force: continuous model improvement.

Figure 6: Noise-Resilience Patterns in AI Voice Systems.

Each of the patterns above controls where noise is handled (edge, proxy, or model loop), creating a distributed surface that balances clarity, latency, and resource cost. Together, these patterns form the “noise resilience surface” of a voice system.
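Two of the patterns above, Dual Channel Confidence and Graceful Degradation, can be sketched as small pure functions. This is a minimal sketch under stated assumptions: `AsrResult`, `merge_dual_channel`, and `choose_channel` are hypothetical names, and only the 0.3 disagreement threshold and the 10 dB cutoff come from the snippets in the list.

```python
from dataclasses import dataclass

@dataclass
class AsrResult:
    transcript: str
    confidence: float  # 0.0 to 1.0

def merge_dual_channel(raw: AsrResult, enhanced: AsrResult,
                       disagreement_threshold: float = 0.3):
    """Pick the higher-confidence result and flag large disagreements."""
    best = raw if raw.confidence >= enhanced.confidence else enhanced
    needs_review = abs(raw.confidence - enhanced.confidence) > disagreement_threshold
    return best, needs_review

def choose_channel(snr_db: float, min_voice_snr_db: float = 10.0) -> str:
    """Fall back to a text channel when the call is too noisy for voice."""
    return "voice" if snr_db >= min_voice_snr_db else "sms"

# Hypothetical results from the raw and enhanced ASR streams.
raw = AsrResult("book a slot for tuesday", 0.52)
enhanced = AsrResult("book a slot for Tuesday", 0.91)
best, needs_review = merge_dual_channel(raw, enhanced)
print(best.transcript, needs_review)
print(choose_channel(8.0))
```

Keeping both decisions as side-effect-free functions makes the thresholds easy to unit test and to tune from telemetry.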

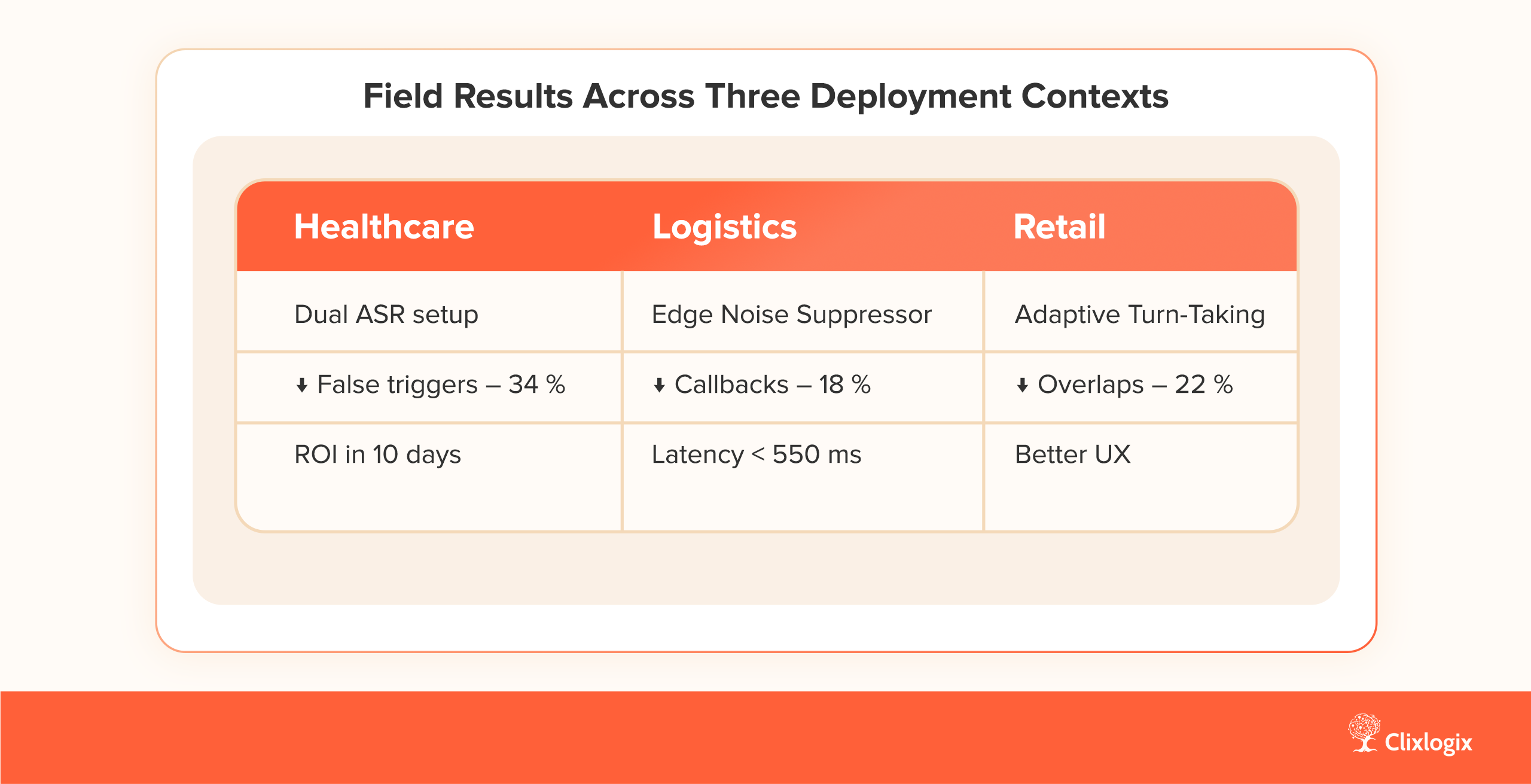

Case 1 – 24/7 Appointment Line, U.S.

The system handled thousands of inbound calls daily, many from hospital corridors and parking lots. Initial models misfired on background announcements, marking them as patient speech. By adding a dual-channel confidence setup, one ASR stream processed raw audio while the other used enhanced input. The system compared both transcripts and chose the higher-confidence line for intent mapping. False triggers fell by 34 %, and token usage dropped 9 %.

The second ASR instance paid for itself within ten days, mostly through shorter conversations and fewer manual transfers.

Case 2 – Logistics Dispatch Line, Saudi Arabia

Dispatchers worked across warehouse yards and truck bays where engines ran constantly. An edge-based noise suppressor using 10 ms frame windows was added to Android devices before the media proxy. This local filter removed heavy engine bands without affecting speech cues. Callbacks dropped by 18 %, and round-trip latency stabilized under 550 ms even on cellular links.

The change shifted troubleshooting time from “bad audio” to “route mismatch,” showing the acoustic layer was no longer the bottleneck.

Case 3 – Retail Voice Concierge, Netherlands

The store’s voice concierge operated in open retail floors with constant chatter and music. Customers often overlapped the agent’s speech, forcing restarts. Adaptive turn-taking logic combined voice-activity detection (VAD) with token streaming, teaching the agent to pause when human speech resumed. Conversation overlap fell by 22 %, and perceived response speed improved even though the underlying large language model (LLM) and text-to-speech (TTS) stack stayed the same.

The gain came purely from timing discipline.
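The pause-on-speech behavior described in this case can be illustrated with a small simulation. It is a sketch only: `speak_with_barge_in` and the boolean VAD callback are hypothetical stand-ins, not the deployed system's interfaces.

```python
from typing import Callable, Iterable, List

def speak_with_barge_in(tokens: Iterable[str],
                        user_is_speaking: Callable[[], bool]) -> List[str]:
    """Emit tokens one at a time, stopping as soon as the caller talks."""
    spoken = []
    for token in tokens:
        # Poll the (hypothetical) VAD before each token so the agent can
        # yield mid-sentence instead of finishing the whole utterance.
        if user_is_speaking():
            break
        spoken.append(token)
    return spoken

# Simulated VAD readings: the caller starts talking on the fourth poll.
vad_frames = iter([False, False, False, True, True])
spoken = speak_with_barge_in(
    ["Your", "appointment", "is", "confirmed", "for", "Monday"],
    lambda: next(vad_frames),
)
print(spoken)
```

Checking the VAD per token rather than per utterance is what lets the agent stop quickly; the cost is one extra poll for every token streamed.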

Figure 7: Field Results Across Three Deployment Contexts

Voice systems require observability beyond standard metrics. Teams should track acoustic and semantic health:

Dashboards should combine audio telemetry with system logs. A minimal SQL-style query for insight:

    SELECT AVG(latency_ms), AVG(snr_db) FROM voice_events WHERE snr_db < 15;

This helps engineers identify where network or microphone quality degrades user experience. Modern pipelines integrate Prometheus exporters to visualize these metrics in Grafana.
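To try the query without a production database, the sketch below runs it against an in-memory SQLite table. The `call_id` column and the three sample rows are assumptions made for illustration; only `voice_events`, `latency_ms`, and `snr_db` come from the query itself.

```python
import sqlite3

# In-memory database with a hypothetical schema for voice telemetry.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE voice_events (call_id TEXT, latency_ms REAL, snr_db REAL)"
)
conn.executemany(
    "INSERT INTO voice_events VALUES (?, ?, ?)",
    [("a", 420.0, 22.0), ("b", 610.0, 12.0), ("c", 590.0, 9.0)],
)

# Average latency and SNR for noisy calls only (SNR below 15 dB).
avg_latency, avg_snr = conn.execute(
    "SELECT AVG(latency_ms), AVG(snr_db) FROM voice_events WHERE snr_db < 15"
).fetchone()
print(avg_latency, avg_snr)
conn.close()
```

Comparing the same averages for calls at or above 15 dB is a quick way to see how much latency correlates with poor audio.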

Monitoring should extend to consent logging and ASR transcript anomalies. Observability closes the loop between architecture and behavior.

Teams often allocate milliseconds and megabytes; few allocate decibels. A noise budget defines how much degradation each layer can tolerate before cascading failure.

| Layer | Acceptable SNR Loss (dB) | Typical Impact |
|---|---|---|
| Capture | 0–5 | Barely audible; minimal ASR loss |
| Enhancement | 5–10 | Acceptable trade-off for latency |
| Recognition | 10–15 | Speech misfire risk |
| Response | <5 | Affects naturalness |

Budgeting creates measurable thresholds. During design reviews, teams can discuss whether an enhancement model’s CPU cost is justified by the dB gain.
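One way to make the budget checkable in a design review is to compare measured per-layer SNR loss against the table's upper bounds. This is a sketch under assumptions: the measured values are invented, `over_budget` is a hypothetical helper, and the upper end of each range is treated as the limit.

```python
# Upper bounds taken from the noise-budget table (dB of SNR loss).
NOISE_BUDGET_DB = {
    "capture": 5.0,
    "enhancement": 10.0,
    "recognition": 15.0,
    "response": 5.0,
}

def over_budget(measured_loss_db: dict) -> dict:
    """Return layers whose measured SNR loss exceeds the budget, with the excess."""
    return {
        layer: round(loss - NOISE_BUDGET_DB[layer], 2)
        for layer, loss in measured_loss_db.items()
        if loss > NOISE_BUDGET_DB[layer]
    }

# Hypothetical measurements from a test call.
measured = {"capture": 3.0, "enhancement": 12.5, "recognition": 9.0, "response": 4.0}
violations = over_budget(measured)
print(violations)
```

An empty result means every layer is within budget; anything else names the layer to investigate first.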

    SNR (dB) ↓ → Quality ↓ → Retry ↑

Noise, like latency, is finite; design decisions must allocate it deliberately.

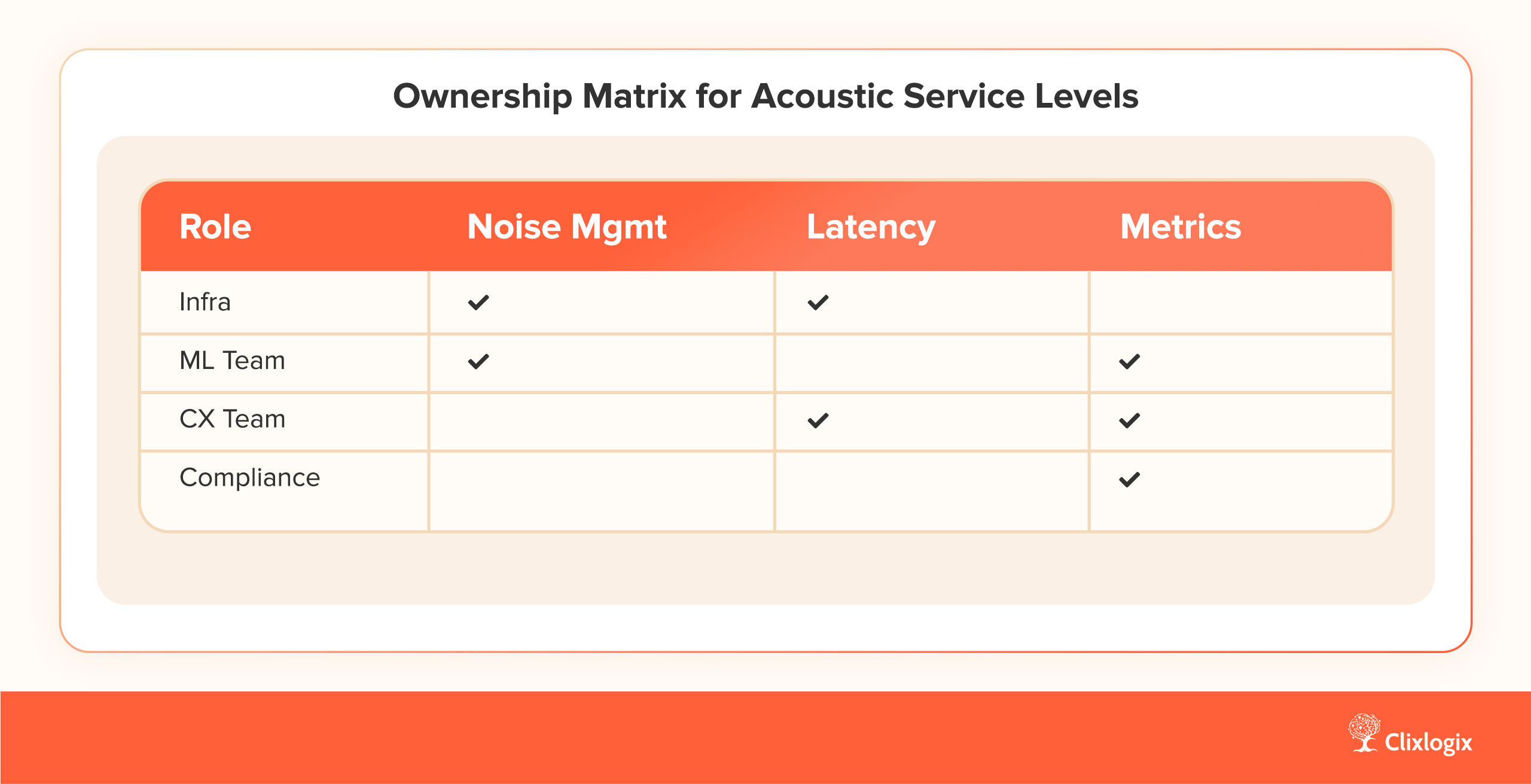

Latency and acoustic quality often fall between infrastructure, ML, and CX teams. Define ownership early with measurable acoustic service levels:

Cross-language resilience should be planned during data collection. Large multilingual models demonstrate that shared training improves accent handling in mixed-language markets. Oversight principles similar to those discussed in our previous post on AI for Insurance Agents apply: transparency and traceability matter as much as output quality.

Figure 8: Ownership Matrix for Acoustic Service Levels

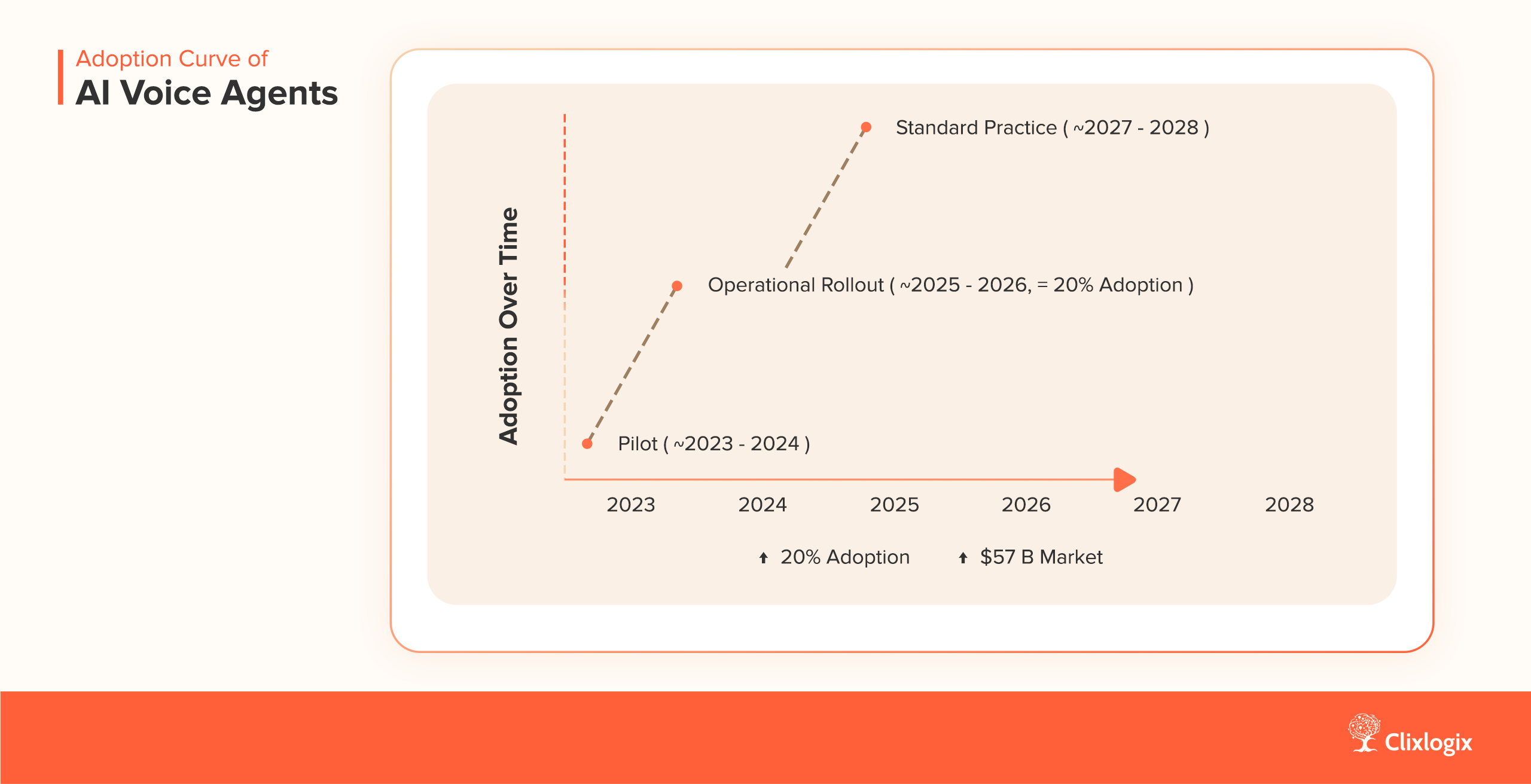

The adoption curve for autonomous voice agents is steepening as call centers, healthcare providers, and logistics firms move from pilots to production rollouts.

A Gartner forecast projects that by 2026, one in five inbound customer service interactions will originate from AI-mediated systems, reflecting a permanent shift in how enterprises handle voice workloads.

Complementing this, Juniper Research from Feb 2025 expects conversational AI revenues to reach US $57 billion globally by 2028, driven by hybrid voice-plus-LLM deployments across industries.

As audio latency approaches sub-600 ms thresholds and consent frameworks mature, the functional boundary between AI receptionist and contact-center infrastructure will continue to blur.

In this environment, noise resilience and acoustic observability evolve from maintenance concerns into competitive differentiators: core metrics that shape reliability, user trust, and compliance readiness.

Figure 9: Adoption Curve of AI Voice Agents

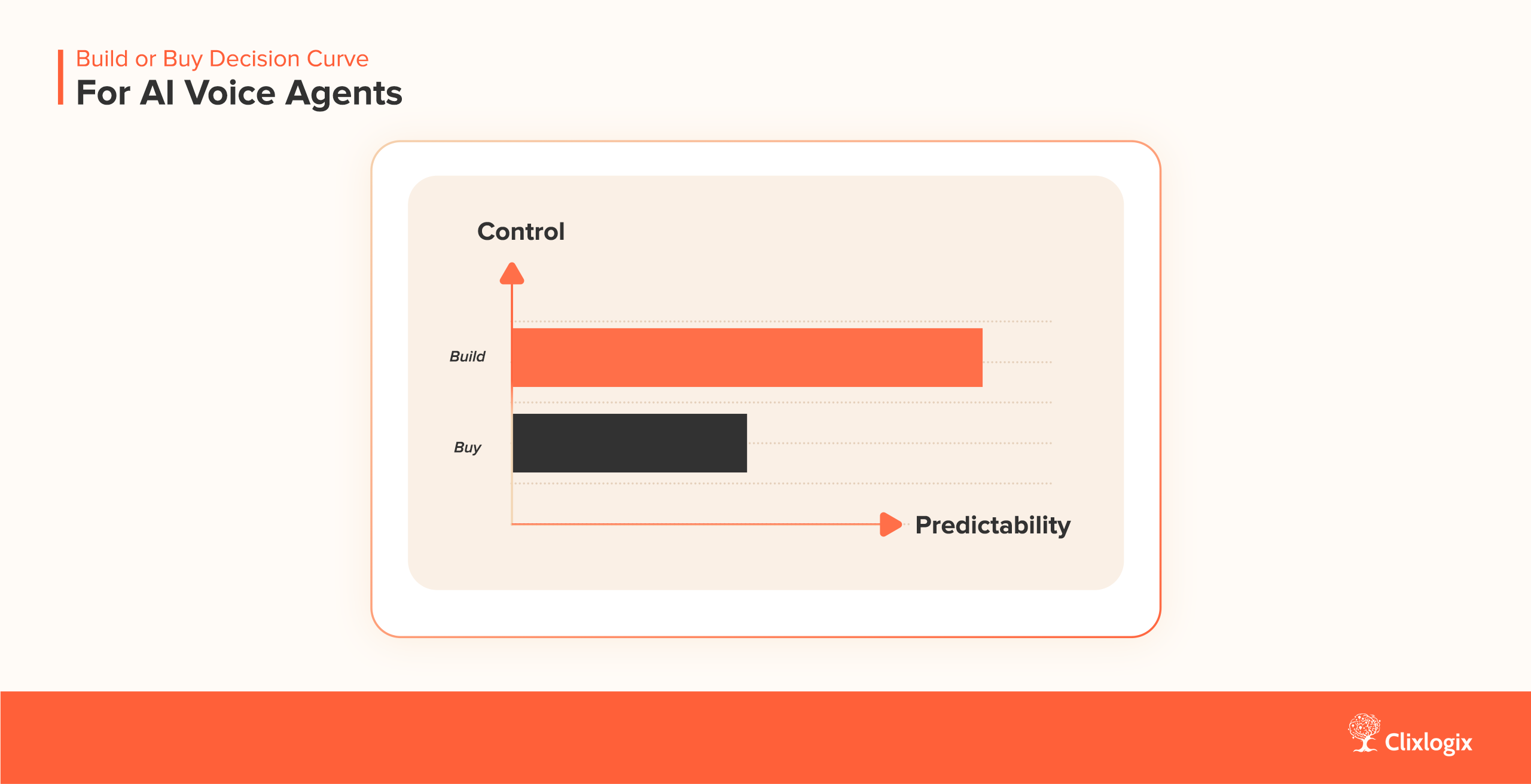

In every AI-voice program, the decisive question isn’t “Should we build or buy?” but “Where do we want control to live?” Control shapes latency, acoustic tuning, compliance posture, and the future cost of change.

Building gives you full command over the acoustic chain: microphone bias correction, ASR retraining, and fine-grained TTS prosody shaping. It also means owning compliance and uptime. Internal teams can align data pipelines with your privacy model, but they carry the weight of tuning models, scaling infrastructure, and managing real-time performance budgets.

A self-built stack pays off when an AI voice becomes a core differentiator, for instance, when tone, timing, or personalization are part of your product identity.

Buying trades control for velocity. Managed platforms such as Retell, Bland, Vonage, and Lindy deliver complete pipelines with predictable latency, compliance frameworks, and scaling built in. They’re ideal when conversational quality is supportive, not strategic. The trade-off is opacity: you inherit the vendor’s tuning curve, token-billing logic, and change schedule.

The practical middle lies in orchestration. Some teams deploy vendor ASR and TTS but keep their own intent router or analytics layer. This preserves predictable pricing while maintaining visibility into acoustic performance and user experience.

| Factor | Build In-House | Buy Platform |
|---|---|---|
| Acoustic tuning | Full control | Limited visibility |
| Compliance | High effort | Managed framework |
| Latency tuning | Custom | Vendor dependent |
| Cost predictability | Variable | Tiered pricing |
| Innovation speed | High | Moderate |

Figure 10: Build or Buy Decision Curve for AI Voice Agents

When deciding, teams must factor in observability maturity and domain regulation. Enterprises that need traceable pipelines tend to build; startups that value time-to-market tend to buy.

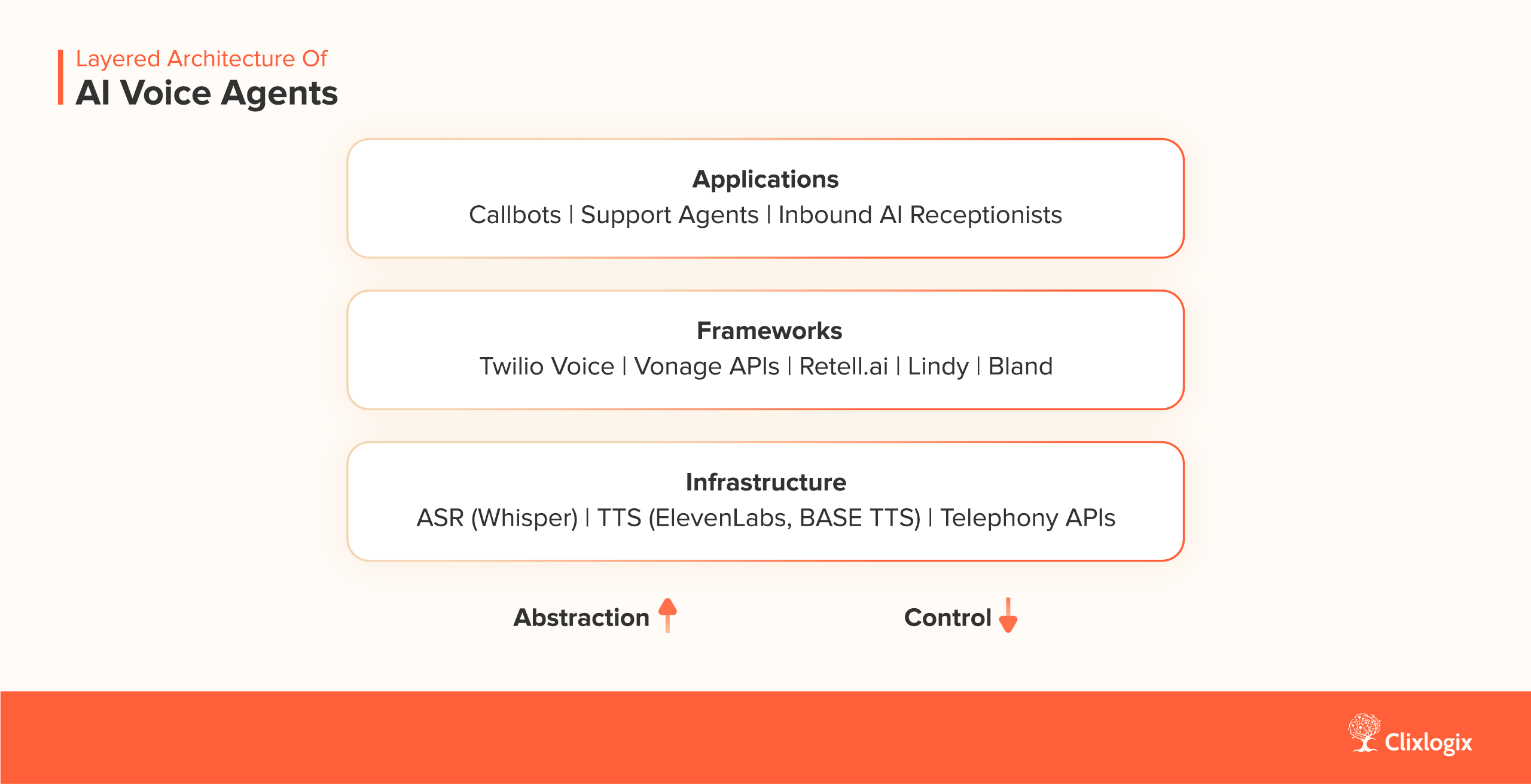

Figure 11: Layered Architecture of AI Voice Agents

This stack separation defines where innovation leverage lives. Framework builders abstract telephony and TTS, but infra teams still dominate latency and noise control.

Understanding where you operate (infra, framework, or app) drives hiring, SLAs, and product velocity.

At Clixlogix, we treat the decision as an architectural boundary. Once drawn correctly, that boundary determines how fast you can evolve when the next layer of the voice ecosystem shifts.

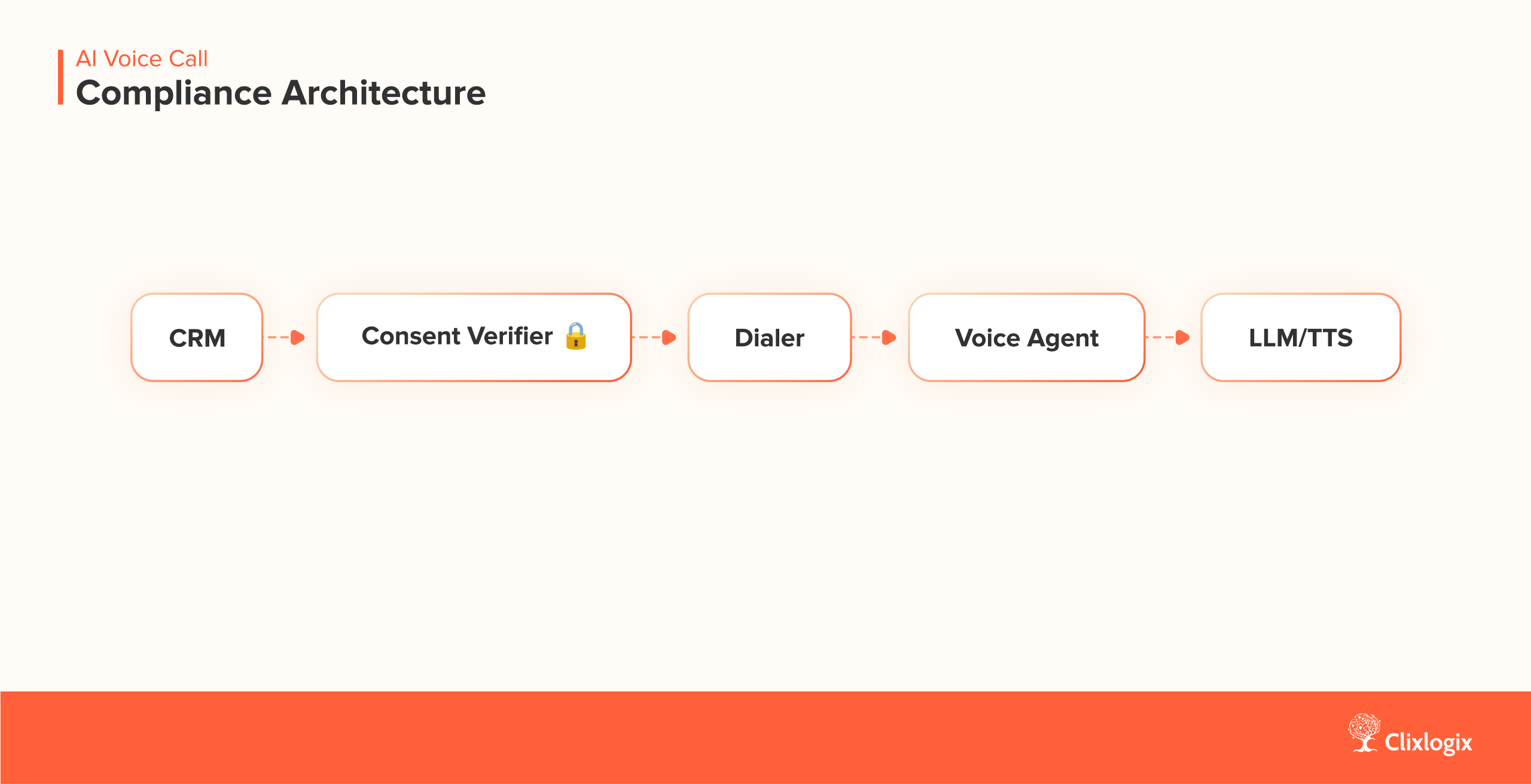

The FCC’s TCPA consent rules for AI voice calls and FTC disclosure proposals require runtime consent checks. Implement a consent gate inside the call flow:

Figure 12: AI Voice Call Compliance Architecture

“AI-generated voice calls fall under prerecorded consent rules.” – FCC, 2024

Consent events should be logged with timestamp and hash ID to verify audit trails. This allows compliance teams to map each call to stored authorization.
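A consent event with a timestamp and hash ID, plus a simple gate on it, might look like the sketch below. Everything here is an assumption for illustration: the field names, the SHA-256 choice, the `previous_hash` chaining, and the functions `consent_record`, `verify`, and `may_proceed` are hypothetical. A real deployment would also need to decide how caller numbers are protected at rest.

```python
import hashlib
import json
from datetime import datetime, timezone

def consent_record(call_id: str, caller_number: str, consent_given: bool,
                   previous_hash: str = "") -> dict:
    """Build a consent event whose hash_id covers every other field."""
    record = {
        "call_id": call_id,
        "caller_number": caller_number,
        "consent_given": consent_given,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Chaining to the previous record makes silent deletions detectable.
        "previous_hash": previous_hash,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["hash_id"] = hashlib.sha256(payload).hexdigest()
    return record

def verify(record: dict) -> bool:
    """Recompute the hash and compare it with the stored hash_id."""
    body = {k: v for k, v in record.items() if k != "hash_id"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest() == record["hash_id"]

def may_proceed(record: dict) -> bool:
    """Consent gate: continue only with intact, affirmative consent."""
    return bool(record["consent_given"]) and verify(record)

# Hypothetical call and number, for illustration only.
granted = consent_record("call-001", "+15550100", True)
print(may_proceed(granted))
```

Because the hash covers the consent flag and timestamp, any later edit to a stored record causes `verify` to fail, which is what lets auditors map a call to its original authorization.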

Figure 13: Caller Identity to Consent Mapping For AI Voice Agents

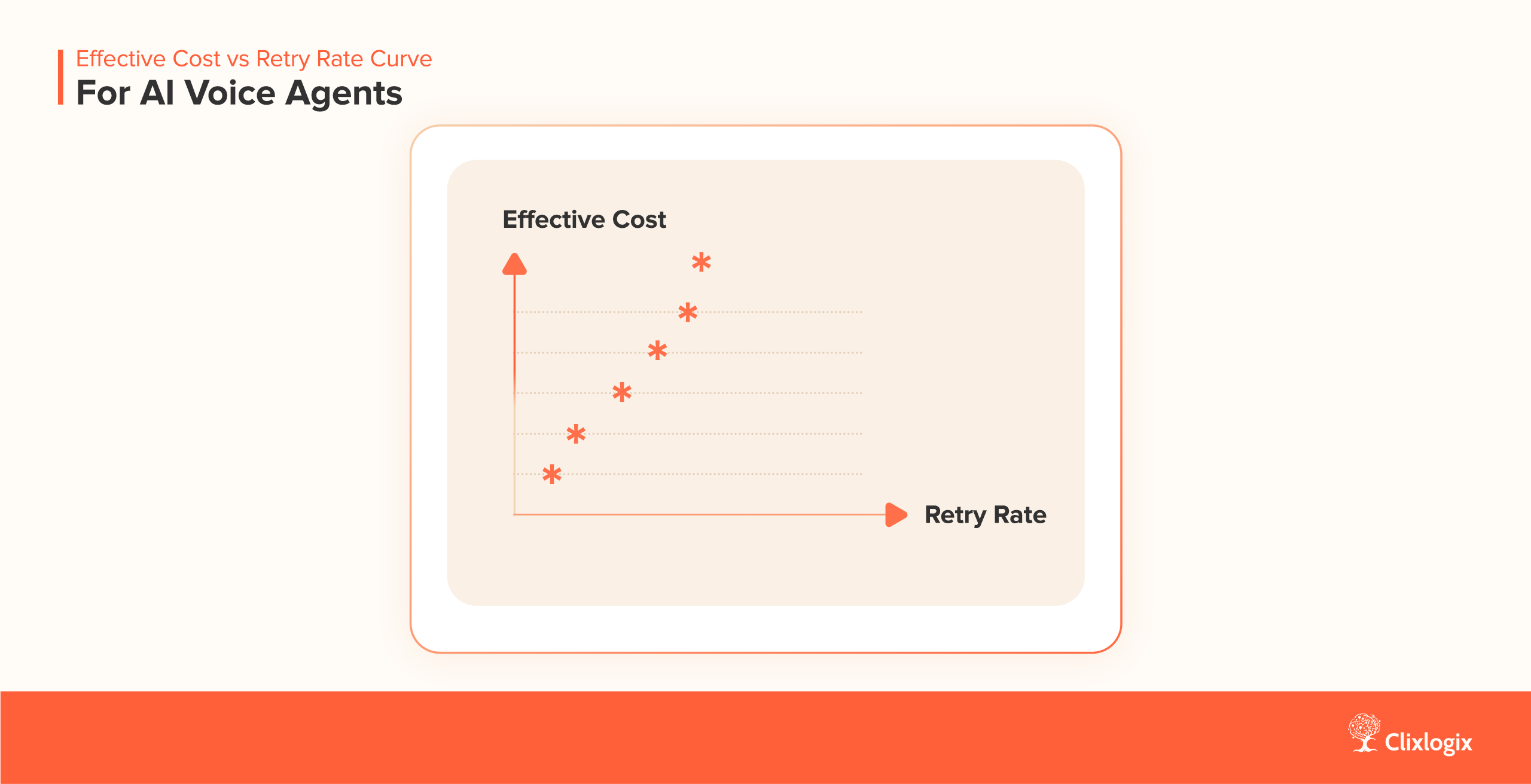

Noise inflates call duration and token usage. The relation is measurable:

    Effective Cost = Base ($/min) × (1 + RetryRate × TokenFactor)

Streamable TTS architectures lower idle gaps and shorten conversations. With hardware noise budgets shrinking and ASR cost per minute dropping 40% YoY, the economic window for real-world deployment is open now.

Example: a 7% retry rate and a token multiplier of 0.2 give 1 + 0.07 × 0.2 = 1.014, raising effective cost by about 1.4%.
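The cost relation can be evaluated directly. In this sketch the function name and the $0.10/min base rate are hypothetical; the retry rate and token factor are the example values from the text, which the formula turns into a multiplier of 1.014, an increase of about 1.4%.

```python
def effective_cost(base_per_min: float, retry_rate: float, token_factor: float) -> float:
    """Effective Cost = Base × (1 + RetryRate × TokenFactor)."""
    return base_per_min * (1 + retry_rate * token_factor)

# With a base of 1.0 the result is the cost multiplier itself.
multiplier = effective_cost(1.0, retry_rate=0.07, token_factor=0.2)
print(f"multiplier: {multiplier:.3f}")

# Hypothetical base rate of $0.10 per minute.
print(f"effective $/min: {effective_cost(0.10, 0.07, 0.2):.4f}")
```

Sweeping `retry_rate` over the range observed in telemetry shows how much of the per-minute bill is attributable to noise-driven retries.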

Figure 14: Effective Cost vs Retry Rate Curve for Voice AI Agents

A 5 % ASR accuracy improvement can yield nearly 8 % cost savings. Managing noise is both an engineering and economic optimization problem.

Experiments like Gibberlink show agents switching from speech to encoded data-over-sound when both sides are synthetic. Human-AI conversations need realism; AI-AI exchanges prioritise efficiency. Future architectures will host both channels simultaneously.

Emerging research focuses on speech-to-speech LLMs that remove intermediate text transcription. Models like Whisper+GPT Realtime and ElevenLabs Realtime allow direct audio-in/audio-out within 500 ms.

This reduces jitter but introduces new synchronization and observability challenges.

Some vendors also push perceptual realism, tuning suppression not for silence but for natural proximity. Compliance will converge around watermarking and synthetic voice traceability under new AI Acts.

    Human ↔ AI : ~~~ Speech Channel ~~~ (Authenticity)
    AI ↔ AI    : === Data-over-Sound === (Efficiency)

    [Shared Communication Hub]

“As agents learn to speak less like us and more like each other, silence may become the ultimate signal of intelligence.”

Noise is a first-class concern in AI voice-agent design. It influences clarity, cost, and trust. Building believable systems means budgeting for noise, not eliminating it. The most reliable agents will sound less perfect—but more real.

(See What Is an AI Agent? for background on AI agent fundamentals.)

| Metric | Target / Best Practice |
|---|---|
| SNR | ≥ 20 dB |
| Round-trip Latency | ≤ 600 ms |
| MOS (Noisy train) | ≥ 4.2 / 5 |
| ASR WER | ≤ 10 % |
| Consent Logs | Immutable, hashed |

Building or tuning AI voice agents for real-world performance requires a blend of machine learning, audio engineering, and compliance expertise. Our team at Clixlogix helps enterprises deploy and optimize production-ready voice systems, from ASR pipelines to noise-aware AI agents.

Akhilesh leads architecture on projects where customer communication, CRM logic, and AI-driven insights converge. He specializes in agentic AI workflows and middleware orchestration, bringing a “less guesswork, more signal” mindset to each project and ensuring every integration is fast, scalable, and deeply aligned with how modern teams operate.
